15 Assumption Testing Methods For Product Management Teams

In 2015, 116 founders of early-stage startups participated in a special training program in Italy. They were invited to 5 lectures and 5 mentorship sessions on building and scaling a tech company. But there was a hidden twist — half the participants were taught through the lens of the scientific method.

These ‘scientist’ founders learned how to use first-principles thinking to examine ideas and identify the underlying assumptions. They were trained to design experiments to test their assumptions, define their criteria for each experiment’s success or failure beforehand, and collect evidence to inform their next decision.

One year after the training program, the “scientist” founders had 6x higher average revenue than the control group (with a median difference of 1.8x).

Why would the scientific method lead to more revenue? Because being better at designing and running experiments means you find the right answer faster. The researchers concluded that “entrepreneurs who were taught to formulate hypotheses and rigorously test them on carefully chosen samples of potential customers were more likely to acknowledge that an idea was bad, pivot from non-starters or pitfalls, and generate more revenue.”

This guide covers everything a product team should know about assumption testing:

• Test your assumptions, not your ideas

• ‘Riskiest Assumption Test’ examples

• How to plan your assumption tests

• 15 assumption testing methods

• Defining success and failure

Let’s dive in…

Why the best teams test assumptions instead of ideas

Assumption testing forces us to acknowledge what we believe to be true about our idea and then find ways to check whether those beliefs hold up. It’s not the same as idea testing: we’re not trying to figure out whether our entire idea is viable, we’re checking whether the pillars our idea will be built on are as solid as we assume them to be.

This distinction between idea testing and assumption testing is important. The more time you spend working on an idea, the more likely you are to fall in love with it. We don’t get the same emotional attachment to assumptions because they’re just one little piece of our overall idea. It’s much easier to disprove an individual assumption than to kill an entire idea in one go.

If we were to try to test an entire idea, we would have to build a Minimum Viable Product (MVP). The problem with MVPs is right there in the name — “product.” MVPs offer the perfect excuse to skip assumption testing and start building your product instead. While you may intend for that initial MVP to be small in scope, it often shifts in definition to “Minimum Loveable Product” as you add more and more risky untested features into your product. The more you build, the harder it will be for you to kill a bad idea.

When we shift away from testing our idea and focus instead on assumptions, we replace the MVP with the RAT — Riskiest Assumption Test — which follows this three-step process:

1. List the assumptions that must be true for your idea to succeed.

2. Identify which individual assumption is most important for this success.

3. Design and conduct a test to prove whether this assumption is true or not.

In my opinion, the best part about the RAT is that it doesn’t require a full prototype or launch-ready product (in fact, it explicitly encourages otherwise!).

‘Riskiest Assumption Test’ Real Example

Case Study: DoorDash

In late 2012, the DoorDash founders were interviewing the owner of a macaroon shop in Palo Alto named Chloe. At the very last moment of the interview, Chloe received a call from a customer requesting a delivery and turned the order down. The DoorDash founders were mystified — why would she turn business away like that? It turned out that Chloe had “pages and pages of delivery orders” and “no drivers to fulfill them”. Over the following months, they heard “deliveries are painful” over and over while interviewing 200+ other small business owners.

The supply side of the market had clear potential — restaurant owners needed someone to look after these deliveries for them. But the DoorDash founders decided not to build anything for restaurant owners at the start. In fact, when they first launched, they didn’t even tell any of the featured restaurant owners!

In January 2013, the team created paloaltodelivery.com in a single afternoon: a basic website with PDF menus from a handful of local restaurants and a phone number for placing orders. They charged a flat $6 fee for deliveries, had no minimum order size, and collected payments from customers manually in person.

The point of paloaltodelivery.com wasn’t to prove the potential of their idea to restaurant owners. The DoorDash founders knew their riskiest assumption was whether people would trust a 3rd party website for their food deliveries. This approach was unheard of at the time. If the assumption turned out to be incorrect, they would have to find a completely different way to solve restaurant owners’ delivery problem.

But that wasn’t how things went. The same day they created the website, they received their first order — a guy was searching Google for “Palo Alto Food Delivery” and found their website (via a cheap AdWords campaign they funded). Within weeks, the four founders were receiving so many orders, mostly from Stanford students, that they were struggling to keep up with demand. The experiment had proven their riskiest assumption to be true.

paloaltodelivery.com in May 2013, four months after its initial launch (via Wayback Machine)

How the best product teams PLAN their assumption tests

Assumption Boards

If you’ve ever taken part in a hackathon or Startup Weekend, you’ve likely been given a Lean Canvas to map your assumptions. For teams that are just starting on a new idea, the Lean Canvas often pushes people to invent assumptions by giving them boxes to fill that they hadn’t even considered before.

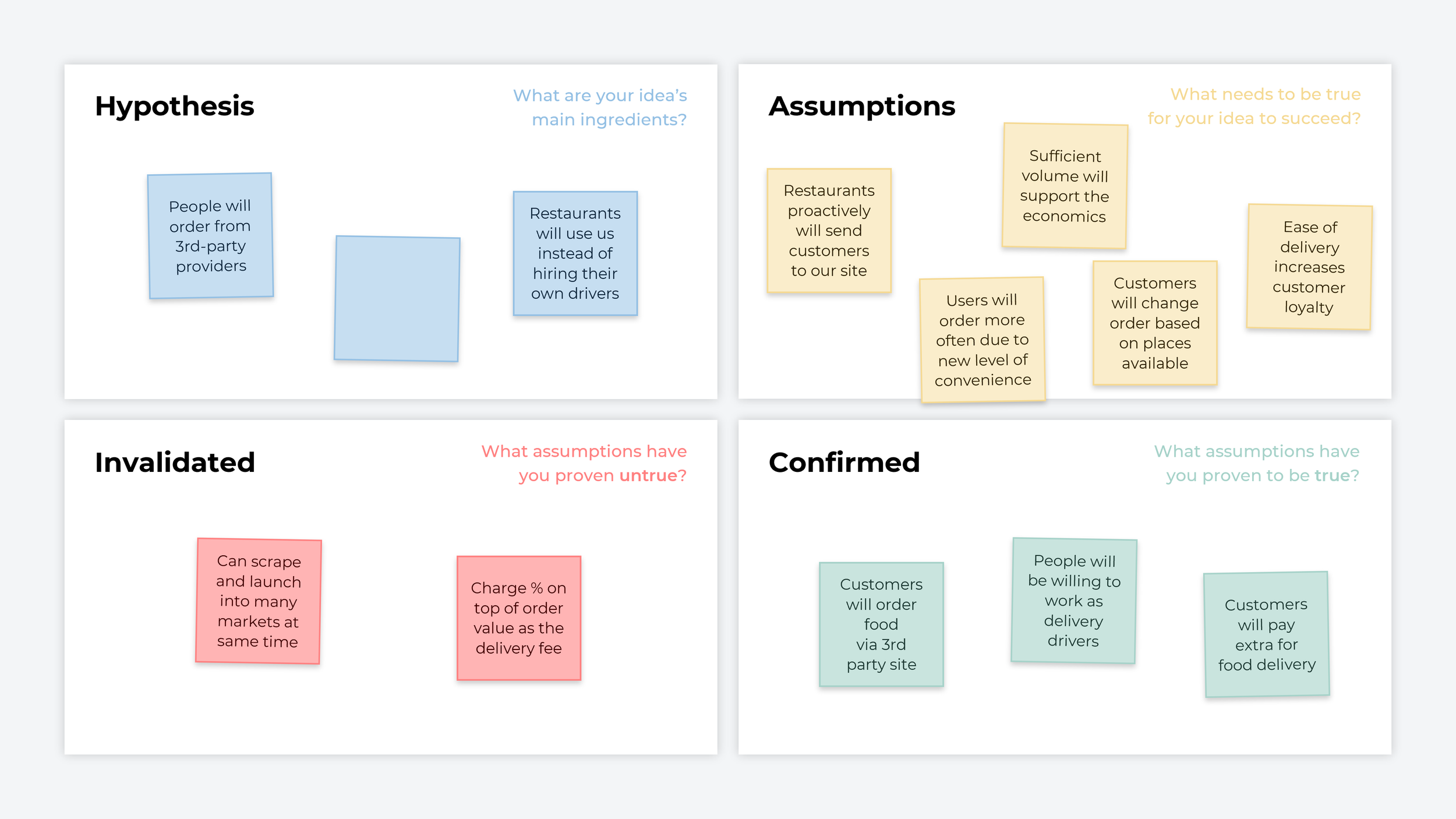

I personally prefer the more basic Assumption Board approach for early-stage teams — four boxes (“Hypothesis, Assumptions, Invalidated, and Confirmed”) for them to capture their assumptions, identify which are the riskiest, and track learnings from their tests as they derisk their idea.
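As a rough illustration, the four boxes of an Assumption Board can be modelled as a small data structure. This is only a sketch: the `risk` score (1 to 5) and the class and method names are assumptions made for the example, not part of any official template, and the sample entries borrow from the DoorDash story above.

```python
from dataclasses import dataclass, field


@dataclass
class Assumption:
    statement: str
    risk: int  # hypothetical 1-5 score: higher means more damage if it is false


@dataclass
class AssumptionBoard:
    hypothesis: str
    assumptions: list[Assumption] = field(default_factory=list)  # still untested
    invalidated: list[Assumption] = field(default_factory=list)
    confirmed: list[Assumption] = field(default_factory=list)

    def riskiest(self) -> Assumption:
        """Return the untested assumption with the highest risk score."""
        return max(self.assumptions, key=lambda a: a.risk)

    def record_result(self, assumption: Assumption, held_up: bool) -> None:
        """Move a tested assumption into the Confirmed or Invalidated box."""
        self.assumptions.remove(assumption)
        (self.confirmed if held_up else self.invalidated).append(assumption)


board = AssumptionBoard(
    hypothesis="People will order restaurant food through a third-party website",
    assumptions=[
        Assumption("Restaurants struggle to fulfil delivery orders", risk=3),
        Assumption("People trust a third-party site with their food order", risk=5),
    ],
)

target = board.riskiest()                  # pick the riskiest assumption
board.record_result(target, held_up=True)  # update the board after the test
```

Running `riskiest()` and `record_result()` once is one loop around the board: pick, test, update.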

Back when I was a Product Innovation Lead at Unilever, there were sprint weeks where we would complete full iterations of the assumptions board every day for five days straight — we’d start each morning by identifying our current riskiest assumption, by lunchtime we had started an experiment to test our assumption, and before 5pm we would update our assumption board with the result of our test. And we were inventing new types of ice cream, so you can’t claim this pace is too fast for software!

Assumption boards rely on your ability to identify your research hypothesis — here’s a great guide by Teresa Torres to help you with that.

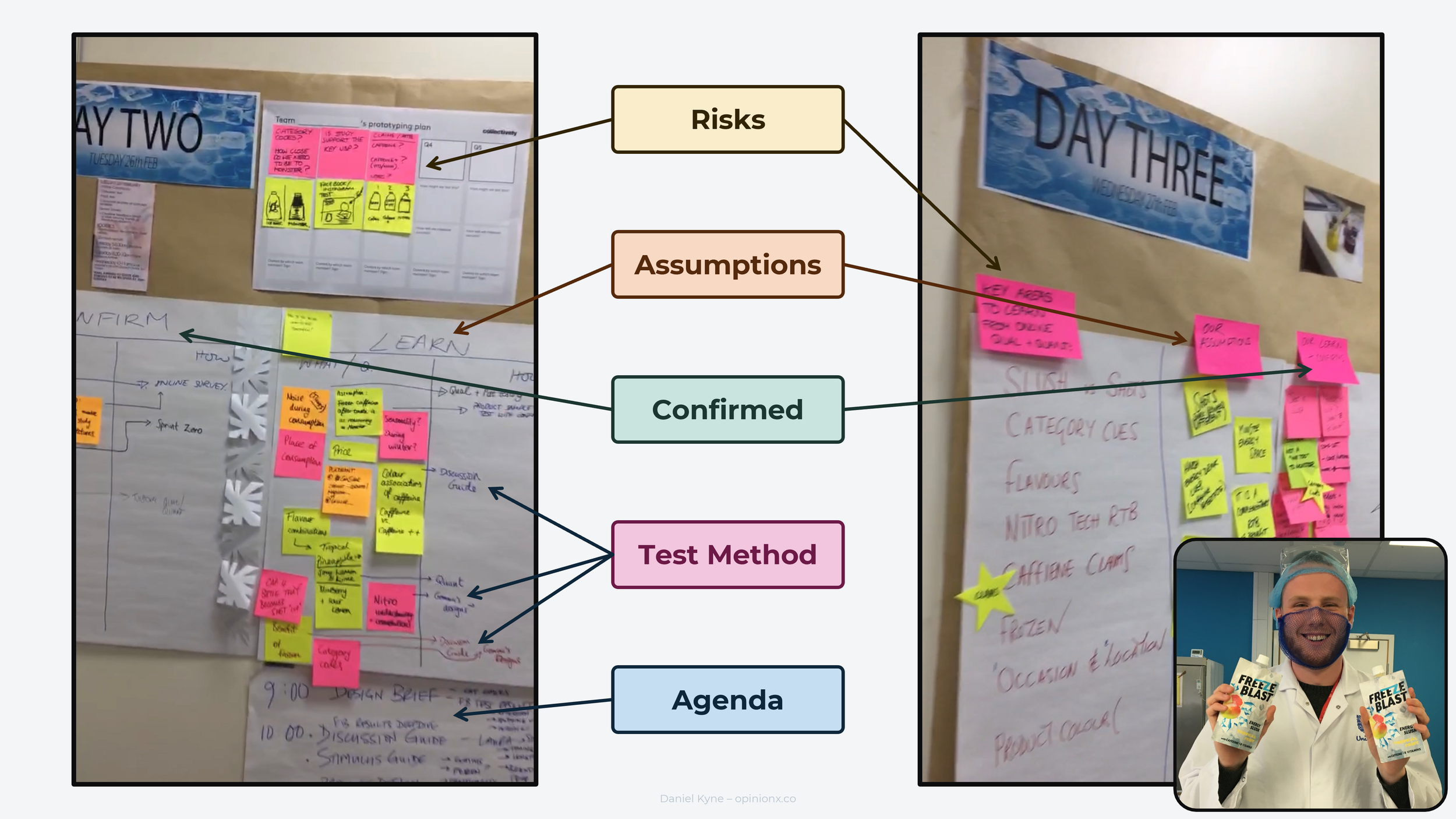

(Sorry for the blurry quality, these are screenshots of a video)

Opportunity Solution Trees

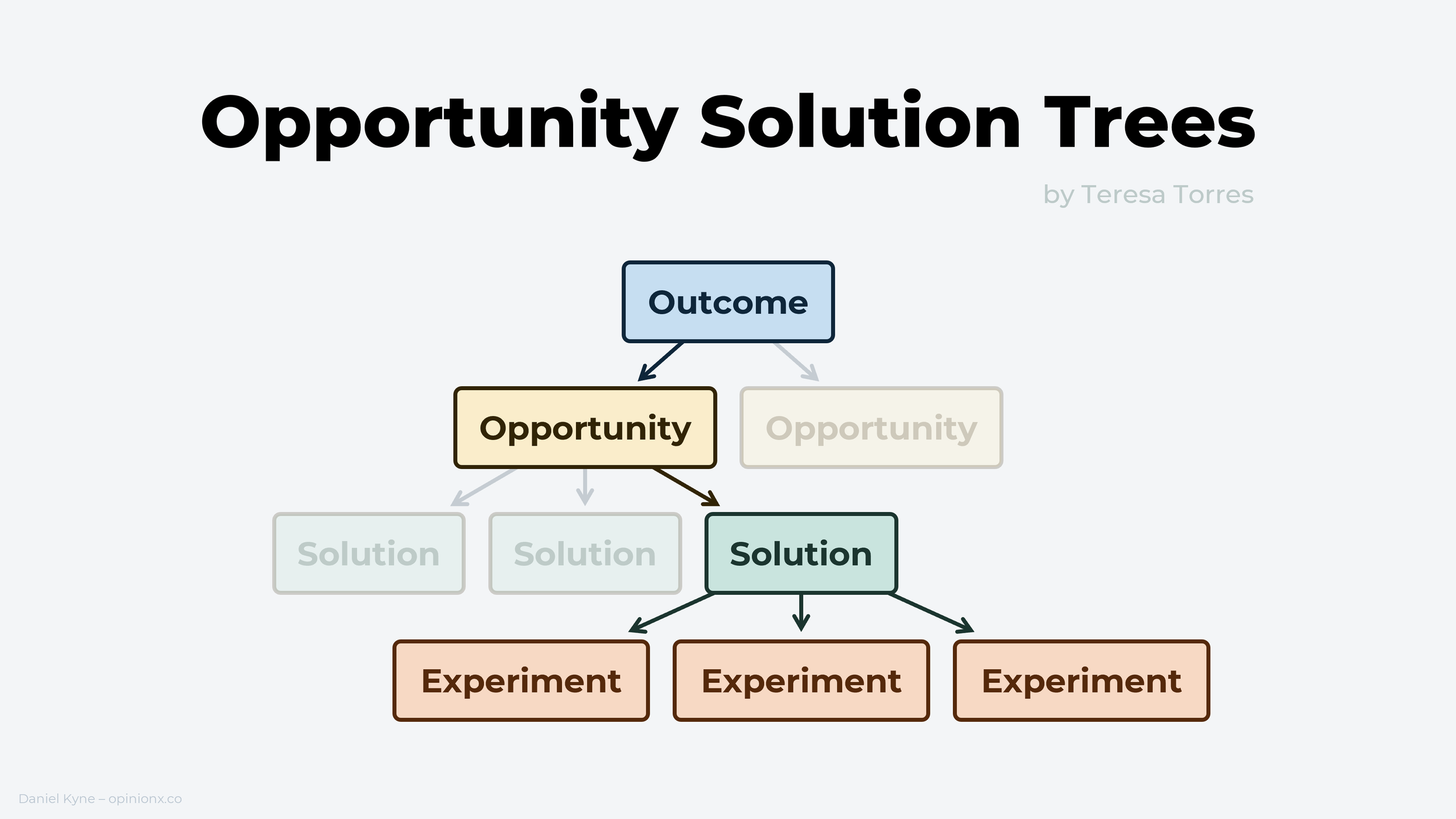

The Opportunity Solution Tree (OST) is a visual framework that helps product teams map their research space and figure out what to prioritize working on next. Instead of fixating on specific customer problems or feature ideas in isolation, the OST helps teams brainstorm the various customer opportunities (unmet needs, pains, desires), solutions that could address each opportunity, and experiments that test these solutions.

There are two hidden superpowers built into Opportunity Solution Trees. The first is called reframing, which takes what we’re fixated on (eg. a new feature idea), identifies what that will accomplish (the customer problem your idea solves), and then brainstorms other ways to accomplish that same objective (other feature ideas). Learn more about reframing in my problem-brainstorming guide.

The second superpower, compare and contrast decisions, is more relevant to assumption testing. Research shows that the more ideas you generate, the better your ideas tend to be. The same goes for product decisions: we’re better at picking which customer problem to focus on when we’ve identified a wider range of customer problems to consider. Teresa Torres, creator of the Opportunity Solution Tree, calls these “compare and contrast” decisions, as opposed to “whether or not” questions.

Using an Opportunity Solution Tree tool like Vistaly or Olta, teams can pick an opportunity or solution and add the underlying assumptions as a note on that node. Then, once they’ve picked which opportunity or solution they want to test, they have a paper trail of the assumptions made when that node was originally added.

Problem Assumptions vs Solution Assumptions

When most people think about assumption testing, they think about idea validation and proving that they’re solving a customer problem. But, as product management leader Marty Cagan says, “People don’t buy the problem, they buy your solution, so don’t spend a lot of time on the problem because you need as much time as possible to come up with the winning solution!”

This sounds like the opposite of most customer discovery advice, but there’s an important nuance in Marty’s words. When people validate a high-priority customer problem, they often assume this also validates their overall idea, when really all they’re doing is skipping all the assumptions they’ve made about their intended solution. This is another reason the Opportunity Solution Tree is so great. Splitting opportunities (customer needs/pains) from solutions encourages us to map our assumptions for each separately. If you’re not using the Opportunity Solution Tree, you can still add a step to your workflow to identify problem and solution assumptions separately to avoid skipping this step.

The Four Product Risks

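Marty doesn’t just tell us to consider solution assumptions, he also gives us the framework to do it: the Four Big Product Risks.

• Value risk: will customers buy it, or will users choose to use it?

• Usability risk: can users figure out how to use it?

• Feasibility risk: can our engineers build it with the time, skills, and technology we have?

• Business viability risk: does the solution also work for the various aspects of our business?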

A traditional product trio tends to divide ownership of each risk as follows:

• Product Manager → Valuable + Viable

• Product Designer → Usable

• Product Engineer → Feasible

As pointed out by Cesar Tapia, risks and assumptions are opposite sides of the same coin — risks hurt when they’re true, assumptions hurt when they’re false.

Planning Your Assumption Tests

The reason I like these frameworks is because they make it 10x easier to plan good assumption tests. For example, the Opportunity Solution Tree forces us to ask questions about the links between the company’s desired outcome, the problems customers face, and the solutions we think might solve those problems. This raises questions like, “Is this the only way a customer would try to solve this problem?”, “Is this solution too complex for the type of customer experiencing this need?”, and “Will this experiment give me a new or clearer perspective on the proposed solution?”.

The Four Big Risks gives even more targeted questions about our assumptions, especially those related to solution ideas. It encourages questions like “Do we have the time and resources to accomplish this proposed build?”, “Does this feature solve a problem that’s high enough on the customer’s list of priorities?”, and “Does this solution actually fit into the broader picture of how our company provides value to customers?”.

Just like in idea brainstorming, the more assumptions we can identify, the more likely we are to correctly diagnose which is the riskiest one in most need of testing.

How the best product teams TEST their assumptions

The best product teams run 10-20 experiments every week. If that number sounds absurd or unachievable, it’s because you’re still thinking about assumption testing the wrong way…

When it comes to assumption testing, it’s important to prevent unnecessarily expensive experiments — the type that take the most time to run, risk your credibility, or involve one-way decisions that can’t be undone. My guiding principle for experimentation is that the earlier you are in a project, the lower fidelity your experimentation method should be. As the level of certainty and conviction increases, the experiments move from ‘asking’ methods to ‘building’ methods.

Qualitative Research

1. User Interviews

The lowest-fidelity method of all is simply to ask customers questions that test your assumptions. Recommended resources: The Mom Test for Founders or Deploy Empathy for Product Managers.

2. User Feedback

Customer support, success and account management teams sit on a treasure trove of complaints, suggestions and feedback from customers. Some quick keyword searches can show you the right direction to start digging.

3. Ethnography

Ethnography means spending time with or observing people to learn about their lives, feelings, and habits. Observe customers attempting to complete a routine workflow or task using their existing solution (or your own product) — the more natural the situation, the better.

Quantitative Research

4. Scenario Testing

Scenario testing is a survey method that presents people with hypothetical choices and measures their preferences based on the decisions they make. Unlike rating-scale questions (eg. 1-5 stars), Scenario Tests force people to compare options directly, giving you better insight into how they might act in a real situation. Common formats include:

• Pairwise Comparison — breaks a list of options into a series of head-to-head “pair votes”, measures how often each option is selected, and ranks the list based on relative importance.

• Ranked Choice Voting — presents the full list of options to be ranked in order of preference.

• Points Allocation — gives people a pool of credits to allocate amongst the available options however they see fit so as to measure the magnitude of their preferences.
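To make the Pairwise Comparison format concrete, here is a minimal sketch that ranks options by win rate (wins divided by appearances). Win rate is just one simple way to score pair votes and is an assumption of this example; survey tools may use other scoring models. The option names and votes are made up.

```python
from collections import Counter


def rank_by_win_rate(votes):
    """Rank options from a list of (winner, loser) pair votes.

    Each option's score is the share of its head-to-head appearances it won.
    """
    wins, appearances = Counter(), Counter()
    for winner, loser in votes:
        wins[winner] += 1
        appearances[winner] += 1
        appearances[loser] += 1
    rates = {option: wins[option] / appearances[option] for option in appearances}
    # Highest win rate first
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Hypothetical pair votes: (option the respondent picked, option they rejected)
votes = [
    ("slow deliveries", "high fees"),
    ("slow deliveries", "limited menu"),
    ("high fees", "limited menu"),
    ("slow deliveries", "high fees"),
]

ranking = rank_by_win_rate(votes)
for option, rate in ranking:
    print(f"{option}: {rate:.0%}")
```

Because every option is scored against the number of times it was actually shown, options that appear in fewer pairs are not penalized for it.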

OpinionX is a free research tool for creating scenario-based surveys for assumption testing using any of the formats mentioned above. It comes with analysis features like comparing results by customer segment, and is used by thousands of product teams at companies like Google, Shopify, LinkedIn and more.

5. Paraphrase Testing

Paraphrase testing helps you figure out if people are interpreting a statement as you expected. No fancy equipment required — just show the statement alongside an open-response text box and ask people to write what it means to them. You can either count keywords or thematically analyze the answers.
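Counting keywords takes only a few lines of code. The sketch below is illustrative: the responses and keyword list are invented, and it counts how many responses mention each keyword at least once rather than doing any real thematic analysis.

```python
import re
from collections import Counter


def keyword_counts(responses, keywords):
    """Count how many responses mention each keyword at least once."""
    counts = Counter()
    for response in responses:
        # Lowercase and split into words so matching ignores case and punctuation
        words = set(re.findall(r"[a-z']+", response.lower()))
        for keyword in keywords:
            if keyword in words:
                counts[keyword] += 1
    return counts


# Hypothetical answers to "What does this statement mean to you?"
responses = [
    "It ranks what customers care about most",
    "A survey tool for ranking priorities",
    "Some kind of analytics dashboard",
]

counts = keyword_counts(responses, ["ranking", "ranks", "priorities", "dashboard"])
print(counts)
```

If the keywords you expected barely show up, that is a sign people are reading the statement differently from how you intended.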

6. Quant Survey

I tend to opt for Scenario Testing if I’m going to be running a survey-based assumption test. However, if your assumption relates to an objective fact (like how much time someone spends on a given task per week or the financial cost a problem represents to their team), then a traditional quantitative survey can be great. Here’s a guide explaining best practices and common pitfalls when using quant surveys.

Demand Testing

7. Ad Testing

Online ads can be used to source participants for your assumption test. DoorDash used AdWords to test whether anyone was searching for food delivery in Palo Alto. You can use Instagram ads with variations of the same image/text and see which gets the highest click-through rate. At Unilever, we used Facebook ads with concept product images that directed people to short surveys, telling us click-through rates as well as their qualitative impressions and customer comprehension of the concepts.

8. Dry Wallet

Dry Wallet tests lead customers through a checkout experience to a dead end like an “Out of Stock” message. These tests let customers prove their intention to buy without you having to invest in the product upfront. Dry Wallet tests are particularly common among ecommerce companies.

Groupon — the founders created a blog with fake deals and offers to see if people would sign up for the deals. They didn’t build the product until they proved this demand.

Buffer — The social media scheduling tool created a landing page and set of pricing plans before ever building the actual product. If you clicked through one of the pricing plans, you were told the product wasn’t ready yet and shown an email sign-up form to be notified about the future launch.

9. Fake Door

Fake Door tests are like Dry Wallet tests but focused on a specific feature rather than mock purchasing a new product entirely. Dropbox created a landing page video using paper cutouts to explain the original product concept. Founder Drew Houston later said that “[the video] drove hundreds of thousands of people to the website. Our beta waiting list went from 5,000 people to 75,000 people literally overnight. It totally blew us away.”

Prototype Testing

10. Impersonator

The Product Impersonator test uses competitor components to deliver the intended product/service without the customer knowing. Product Loops offers two great examples:

Zappos — initially purchased shoes from local retailers as orders came in instead of buying inventory upfront.

Tesla — in 2003 (pre-Elon Musk), Tesla created a prototype fully-electric roadster using a heavily modified, non-functional Lotus Elise to demonstrate to prospective investors and buyers what the final design might look like.

11. Wizard of Oz

A quick way to test an assumption is to offer functionality or services to customers without actually building the process for delivering them. If the customer requests the service or pays upfront for it, you scramble to facilitate the requirements manually behind the scenes. Examples:

DoorDash — the team had no delivery drivers or system in place to process orders when they first launched; they just sent whoever on the founding team was closest to the restaurant to go pick the food up when an order was received.

Anchor — “We hired a couple of college interns, we said to them that people are going to push this magical button and say “I want to distribute my podcast” and your job is to do all that manually but to them it’s going to feel magical like it happened automatically. We just had college students submitting hundreds of thousands of podcasts.” (Quote from Maya Prohovnik, VP of Product)

The video below talks about building ‘Wizard of Oz’ features to close enterprise deals, like a “Generate Report” button that shows a “Report will take 48-72 hours to compile” notification to the user while the report is manually created by an employee.

Source: Startups For the Rest of Us (@startupspod), September 19, 2023. In episode 679, @RobWalling answers listener questions about enabling “mock features” for closing big sales, phased launches and recovering from failed launches, content marketing for SaaS apps, consulting, and more. https://t.co/o4FYg0hhGE

12. Wireframe Testing

The most common wireframe testing methods are usability tests (asking users to complete tasks using interactive prototypes), five-second tests (showing an image or video of the prototype and asking users to explain what they see), first-click tests, and image-based pair voting (showing two images at a time to measure preferences).

Vanta — the first version of Vanta was just a spreadsheet they shared with new customers, testing whether a templated approach to SOC 2 applications could be done. As Vanta’s founder Christina Cacioppo explains, “We started with really open-ended questions, then moved to spreadsheet prototypes, and then moved to prototypes generated with code. At the end of the six months, we started coding.”

Product Analytics

13. A/B Testing

A/B testing is a common type of variable test that splits your users into two groups who are shown two different versions of the product and tracks the differences in their usage. A/B tests are most commonly used for optimization work (finding ways to improve existing functionality), but some companies (like Spotify) often use them to test the impact of new functionality too.

A/B testing needs large sample sizes to detect small effects with statistical significance, which limits its use mostly to large companies. “Unless you have at least tens of thousands of users, the [A/B test] statistics just don't work out for most of the metrics that you're interested in. A retail site trying to detect changes that are at least 5% beneficial, you need something like 200,000 users.” (Ronny Kohavi, VP at Airbnb, Microsoft, Amazon, on Lenny’s Podcast)
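To see where a number of that size can come from, here is a back-of-the-envelope sketch using a common rule of thumb for A/B test sizing: roughly 16 × variance ÷ (minimum detectable difference)² users per variant, for about 80% power at a 5% significance level. The 5% baseline conversion rate is an assumption chosen for illustration and is not a figure from the quote.

```python
import math


def users_per_variant(baseline_rate, relative_lift):
    """Rule-of-thumb sample size per variant for a conversion-rate metric.

    Uses n = 16 * variance / delta^2, where variance = p * (1 - p) and
    delta is the absolute change in conversion rate we want to detect.
    """
    variance = baseline_rate * (1 - baseline_rate)
    delta = baseline_rate * relative_lift
    return math.ceil(16 * variance / delta ** 2)


# Assumed inputs: 5% baseline conversion, detecting a 5% relative improvement
n = users_per_variant(baseline_rate=0.05, relative_lift=0.05)
total = 2 * n  # two variants: control and treatment

print(f"Users per variant: {n:,}")
print(f"Users in total:    {total:,}")
```

With these assumed inputs, the estimate lands at roughly 120,000 users per variant, which is the same order of magnitude as the figure in the quote. Halving the detectable lift roughly quadruples the required sample, which is why small teams rarely have enough traffic.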

A/B testing uses the same principle as pairwise comparison (covered under Method #4, Scenario Testing), except in pairwise comparison surveys, users are shown statements/images instead of the actual product experience.

14. Feature Adoption Rate

Most product analytics tools can track feature adoption rate. With these tools, you can ship a V1 feature and measure how many users try it as a gauge for overall interest. This is similar to how we launched our first needs-based segmentation feature for OpinionX — initially, we shipped a really simple segmentation filter that only worked on the answers to one individual question. Customers quickly asked us to expand the feature to segment more types of data simultaneously and to compare and correlate different customer segments.

15. “Ship It And See”

This is not an assumption test — it’s an “everything” test. Be prepared for a hard fall if you’ve jumped straight to shipping your idea without any experimentation, research, or assumption testing along the way…

Source: @adhamdannaway on Twitter/X

Defining Success & Failure Upfront

Defining conditions for success before conducting an assumption test reduces confirmation bias and (even more importantly) avoids teams disagreeing over the meaning of the results.
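One lightweight way to hold yourself to this is to write the criteria down in a form that can’t be quietly edited later. The sketch below is an assumption-laden illustration: the class name, the metric, and the 5% threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: the criteria cannot be changed once the plan exists
class ExperimentPlan:
    assumption: str
    metric: str
    success_threshold: float  # agreed by the team before any data comes in

    def evaluate(self, observed: float) -> str:
        """Compare the observed result against the pre-agreed threshold."""
        return "confirmed" if observed >= self.success_threshold else "invalidated"


plan = ExperimentPlan(
    assumption="Customers will try to buy the product from a landing page",
    metric="checkout click-through rate",
    success_threshold=0.05,  # hypothetical value chosen for this example
)

result = plan.evaluate(observed=0.031)
print(f"{plan.metric}: {result}")
```

Because the threshold is fixed before the data arrives, there is nothing left to argue about afterwards except what to test next.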

Clear Purpose

The only way to define success for an assumption test is to first understand why you’re conducting this test in the first place.

The Opportunity Solution Tree excels here because its tree-based structure links everything together, and the “Four Big Risks” framework helps you articulate the specific aspect that’s riskiest. For example, if your assumption test is for a new feature, then you know what customer need/pain that solution must address (OST) and which aspect of the solution you’re most uncertain of (4BR).

Example 1: Vanta

Here’s a great example of assumption testing in action from Vanta’s founder Christina Cacioppo, taken from a recent profile by First Round Review:

In their first experiment, they went to Segment, a customer data platform, and interviewed its team to determine what the company’s SOC 2 should look like and how far away it was from getting it. “We made them a gap assessment in a spreadsheet that was very custom to them and they could plan a roadmap against it if they wanted,” she says.

Cacioppo was running a test to answer two key questions:

Could her team deliver something that was credible?

Would Segment think that it was credible?

The answer to both questions ended up being “yes.” And thus, the first (low-tech) version of Vanta was born, as a spreadsheet. “It actually went quite well, so we moved on to a second company, a customer operations platform called Front,” she says.

For this experiment, Cacioppo wanted to test a new hypothesis: Could she give Front Segment’s gap assessment but not tell them it was Segment’s? Would they notice?

“We used the same controls, the same rules and best practices, and still interviewed the Front team to see where Front was in their SOC 2 journey, so it was customized in that sense,” she says. “But this test was pushing on the 'Can we productize it? Can we standardize this set of things?' And most importantly, ‘Can they tell this spreadsheet was initially made for another company?’”

They couldn’t. And then, Cacioppo got an email that sealed the deal in terms of validating her idea.

I had to block quote this whole section because it was already perfect. Christina outlines an initial assumption (Can Vanta credibly provide SOC 2 compliance as a service), tests that, updates her riskiest assumption (the application process for SOC 2 compliance could be standardized), and tests that again. These assumptions are so clearly articulated that the outcome was an obvious success/failure.

Example 2: OpinionX

6 months after launching OpinionX, we lost our one and only paying customer. Having conducted 150+ interviews up to that point, we were sure that our key problem statement — “it’s hard to discover users’ unmet needs” — was the right pain point to focus on. But losing our only customer made us question whether we had really tested this assumption…

We decided to run a quick test, where we compiled a list of 45 problem statements and shared them with 600 target customers (of whom 150 people agreed to help). Each person was shown 10 pairs of problem statements and asked to pick the one that was a bigger pain for them. Using that data, we ranked the statements from most important to least important problem. And guess what? Our key problem statement was DEAD LAST.
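For a sense of scale, the arithmetic behind that test can be sketched in a few lines. The averages assume pairs were spread evenly across statements, which is an assumption of this example rather than a detail of how the test was actually run.

```python
from math import comb

statements = 45
participants = 150
pairs_per_person = 10

possible_pairs = comb(statements, 2)          # distinct head-to-head pairs
total_votes = participants * pairs_per_person
# Each vote involves two statements, so divide the appearances across all 45
avg_appearances = total_votes * 2 / statements

print(f"Possible pairs:  {possible_pairs}")
print(f"Total votes:     {total_votes}")
print(f"Avg appearances: {avg_appearances:.1f} per statement")
```

No single participant has to see every pair: ten votes each is enough for every statement to be compared dozens of times across the group.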

We had spent over a year building a product that solved the wrong problem, all because we had never tested whether the problem was a high priority for customers to solve. When we interviewed participants from this ranking test, we learned that they didn’t actually struggle to discover unmet customer needs. On the contrary, many teams felt like they were drowning in unmet needs and couldn’t figure out which ones to prioritize! We pivoted the product away from problem discovery to problem ranking. One week later, we had 4 paying customers. More on this story here or in the video below:

Comparing Segments

Teams often fail to account for how customer segments influence the results of their assumption tests. Here’s a 30-second example I made that shows how an assumption test can look like a failure at an aggregate level while also showing the opposite result when you isolate a specific customer segment:

To account for segment differences, it’s important to ask whether you’ve baked in any assumptions about customer segments into the test you’re designing. If so, you need a way to filter and/or compare results by customer segments. If that’s not possible, you should find ways to isolate segments (eg. creating identical but separate tests for each key segment) or test your segment hypothesis first.
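Here is a small sketch of how the same results can tell two different stories. The data is entirely made up: with a hypothetical success bar of 50%, the test fails in aggregate while passing comfortably for one segment.

```python
from collections import defaultdict


def rate_by_segment(responses):
    """responses: list of (segment, passed) tuples. Returns {segment: pass rate}."""
    totals, passes = defaultdict(int), defaultdict(int)
    for segment, passed in responses:
        totals[segment] += 1
        passes[segment] += int(passed)
    return {segment: passes[segment] / totals[segment] for segment in totals}


# Hypothetical results: 10 enterprise customers and 40 free-plan customers
responses = (
    [("enterprise", True)] * 8 + [("enterprise", False)] * 2
    + [("free plan", True)] * 4 + [("free plan", False)] * 36
)

overall = sum(passed for _, passed in responses) / len(responses)
by_segment = rate_by_segment(responses)

print(f"Overall: {overall:.0%}")
for segment, rate in by_segment.items():
    print(f"{segment}: {rate:.0%}")
```

The larger free-plan group drags the aggregate down, hiding a strong signal from enterprise customers.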

One final tip is the difference between top-down and bottom-up customer segmentation.

If we’re creating an assumption test to measure feature importance, we can assume that our results will vary significantly depending on the pricing plan each customer is on. In top-down segmentation, you define your key segments upfront.

Imagine you’re running a research project where customers rank problem statements to help you understand which is their highest priority to solve. There are a lot of data points that could impact the results, from company size and industry to seniority and job title. In cases like these, we want to collect enough data across different categories (or enrich our results with existing customer data) so that we can identify the segments that impact the results most afterward. This is bottom-up segmentation, where you let the data tell you which segments impact your results most. More info on bottom-up segmentation here.
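A crude way to let the data point at the most influential segments is to check which attribute splits the scores furthest apart. The sketch below is a simple heuristic, not a statistical test, and the attribute names, scores, and function name are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def spread_by_attribute(rows, attributes, score_key="score"):
    """For each attribute, return the gap between its highest and lowest segment mean."""
    spreads = {}
    for attribute in attributes:
        groups = defaultdict(list)
        for row in rows:
            groups[row[attribute]].append(row[score_key])
        means = [mean(scores) for scores in groups.values()]
        spreads[attribute] = max(means) - min(means)
    return spreads


# Hypothetical importance scores for one problem statement
rows = [
    {"company_size": "small", "seniority": "junior", "score": 9},
    {"company_size": "small", "seniority": "senior", "score": 8},
    {"company_size": "large", "seniority": "junior", "score": 3},
    {"company_size": "large", "seniority": "senior", "score": 2},
]

spreads = spread_by_attribute(rows, ["company_size", "seniority"])
most_influential = max(spreads, key=spreads.get)
print(spreads, most_influential)
```

In this made-up data, company size separates the scores far more than seniority does, so it would be the first segment worth investigating with a larger sample.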

Taken from my guide to needs-based segmentation

Conclusion: Test Your Assumptions!

No matter how strong our product sense is or how close we stay to our customers, many of our assumptions will prove to be incorrect. Learning how to identify the riskiest assumption in our ideas, rapidly and iteratively test those assumptions, and update our view of the customer or market, is a fundamental skill for product teams. I hope this guide has given you clear next steps to help you work on your assumption testing skills!

OpinionX is a free research tool for ranking people’s priorities. Thousands of teams from companies like Google, Shopify and LinkedIn use OpinionX to test assumptions about their customers’ preferences using choice-based ranking surveys so they can inform their roadmap, strategy and prioritization decisions with better data.
