Ultimate Guide to MaxDiff Analysis: Examples, Methods, Tools

What is MaxDiff Analysis?

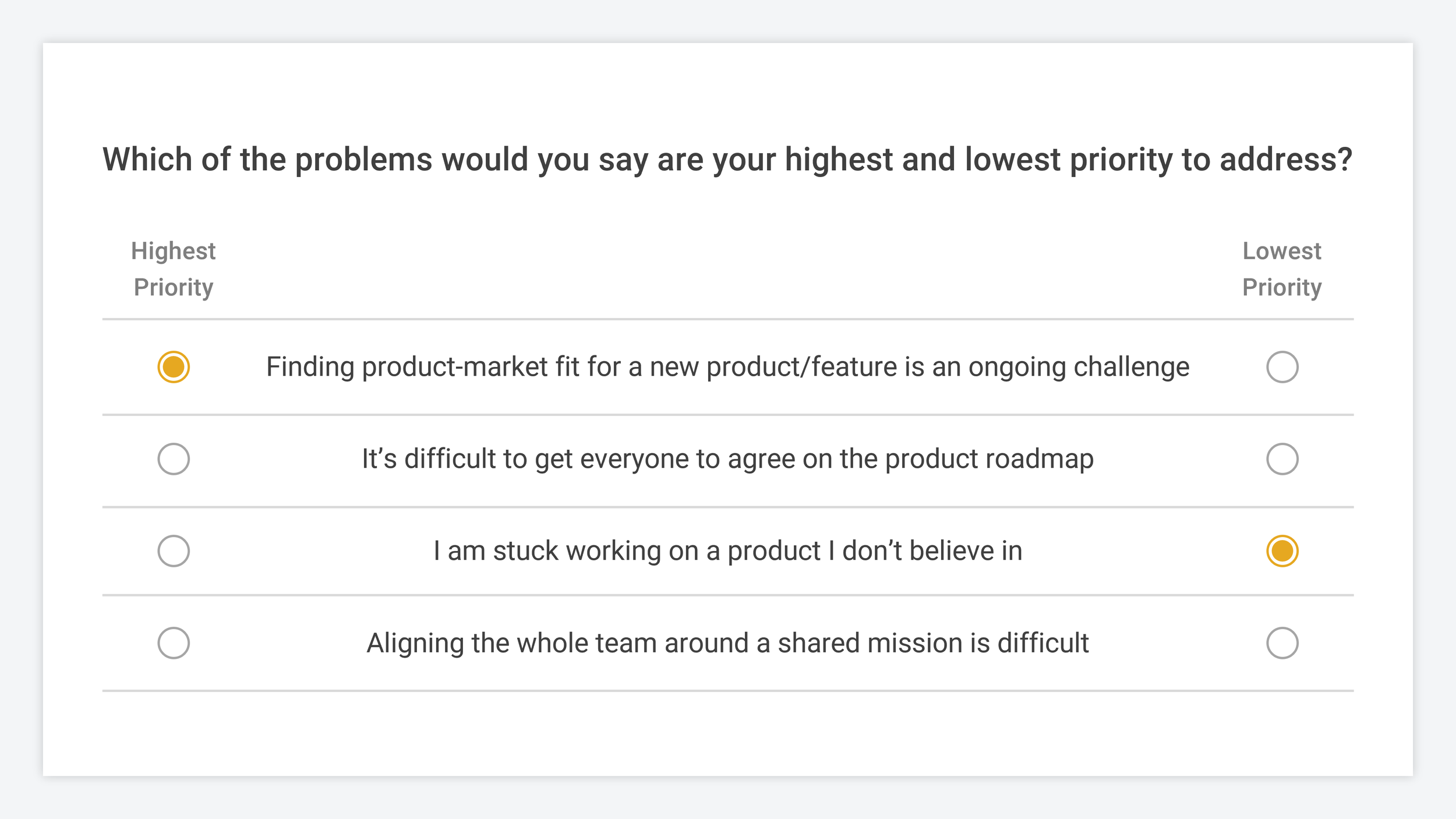

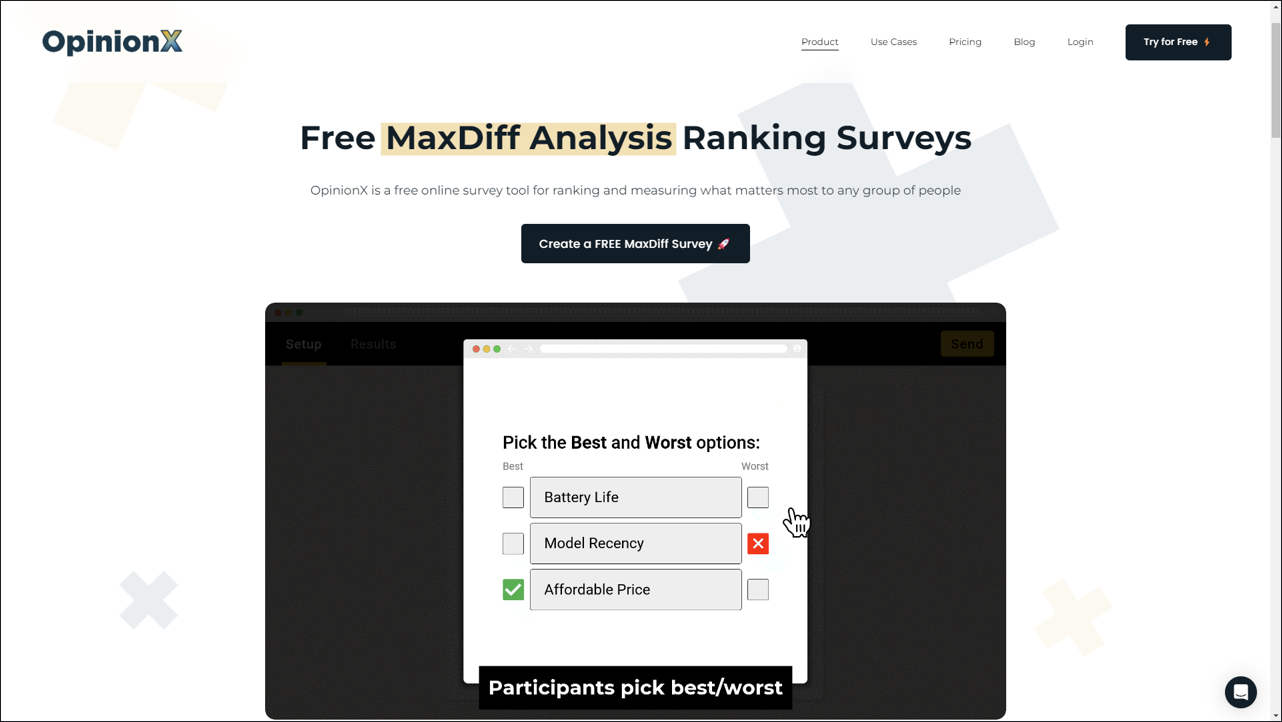

MaxDiff Analysis is a method for ranking people’s preferences by asking them multiple times to choose the best and worst option from a group of statements. Typically only 3-6 options are shown at a time, although you can show more than 6 if required. Each time the respondent votes, a new set of statements from the overall list of ranking options is shown. The “best” and “worst” labels in a MaxDiff survey can be changed to suit your research context, such as Favorite / Least Favorite, Top / Bottom, or Most Preferred / Least Preferred.

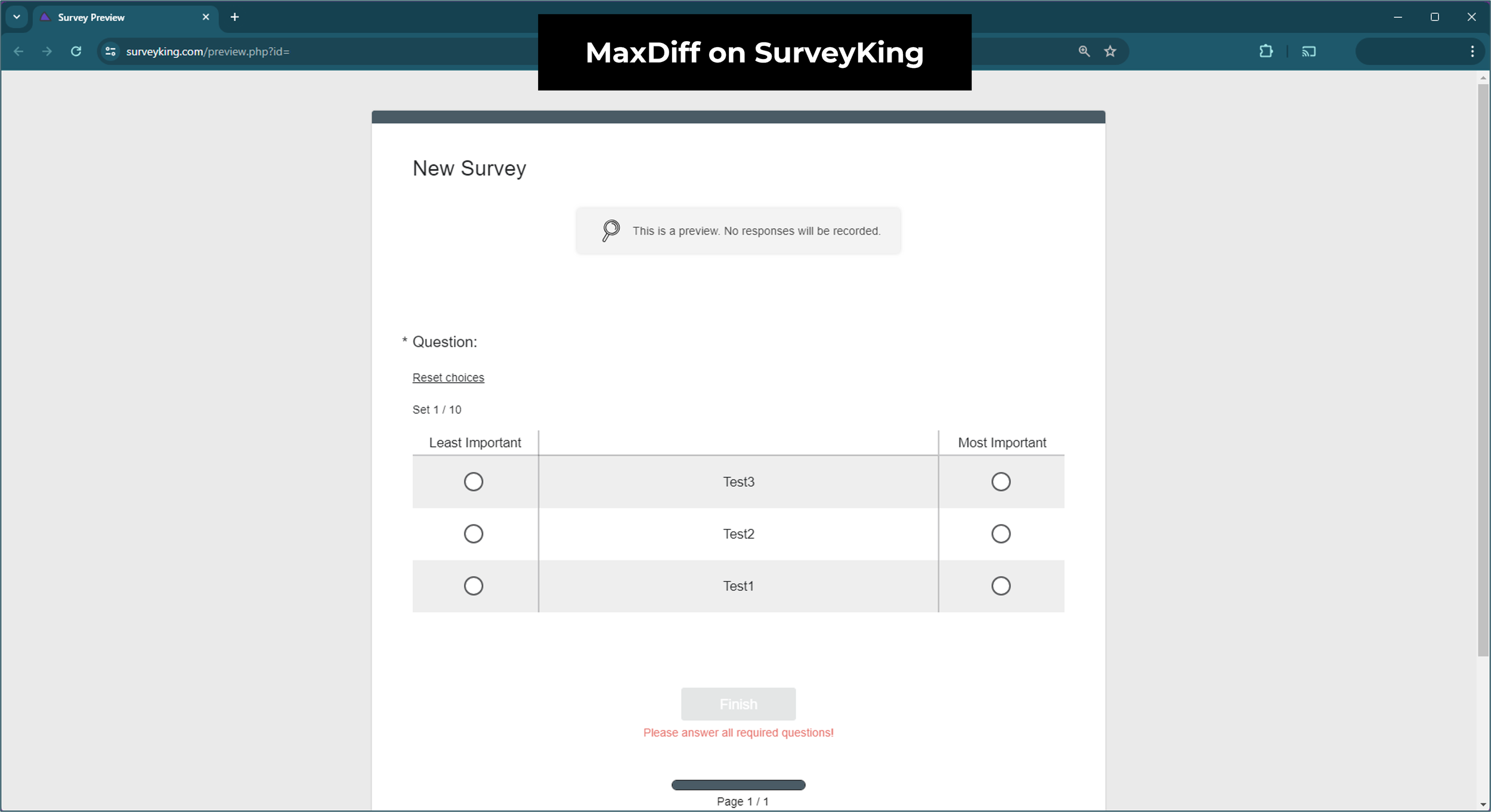

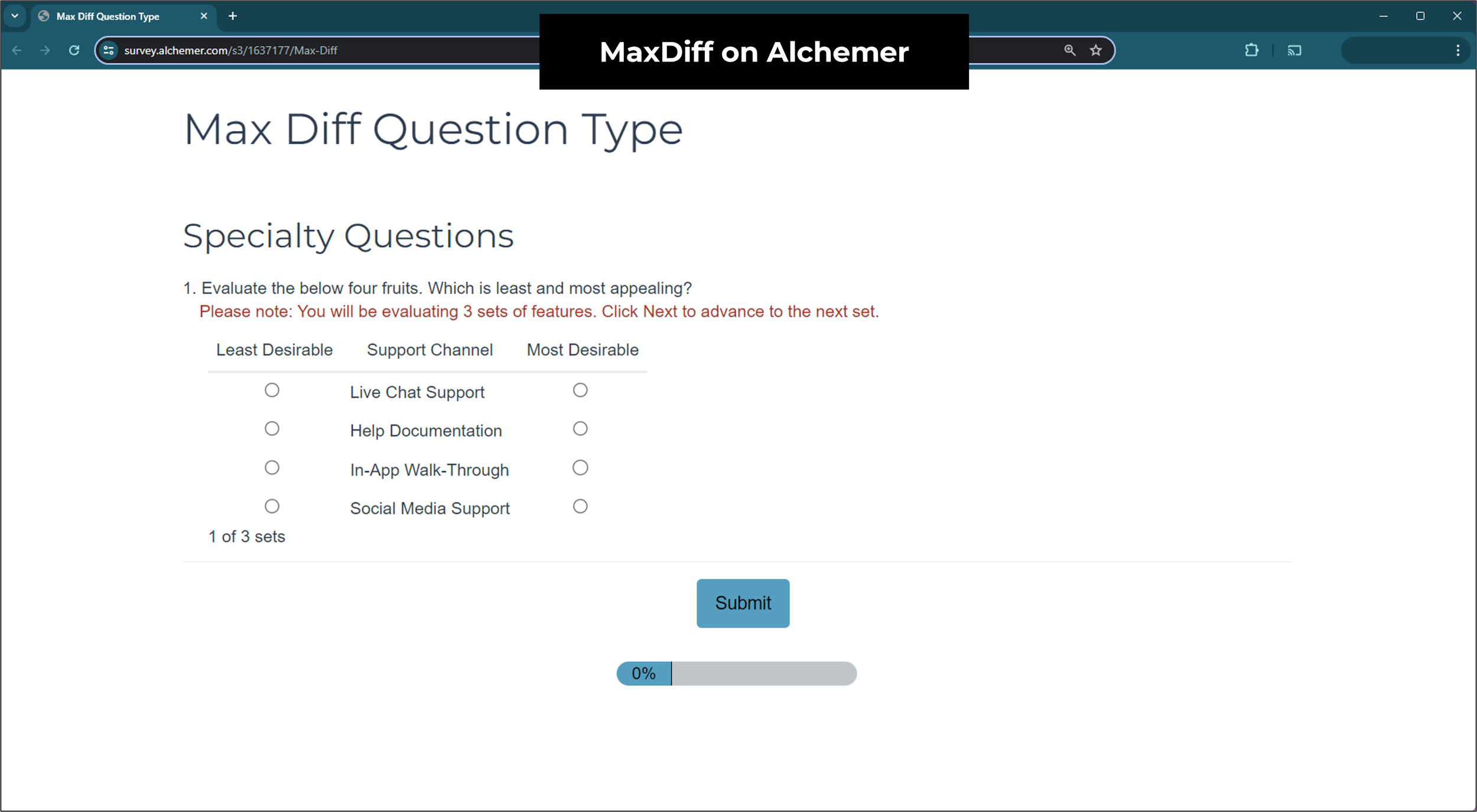

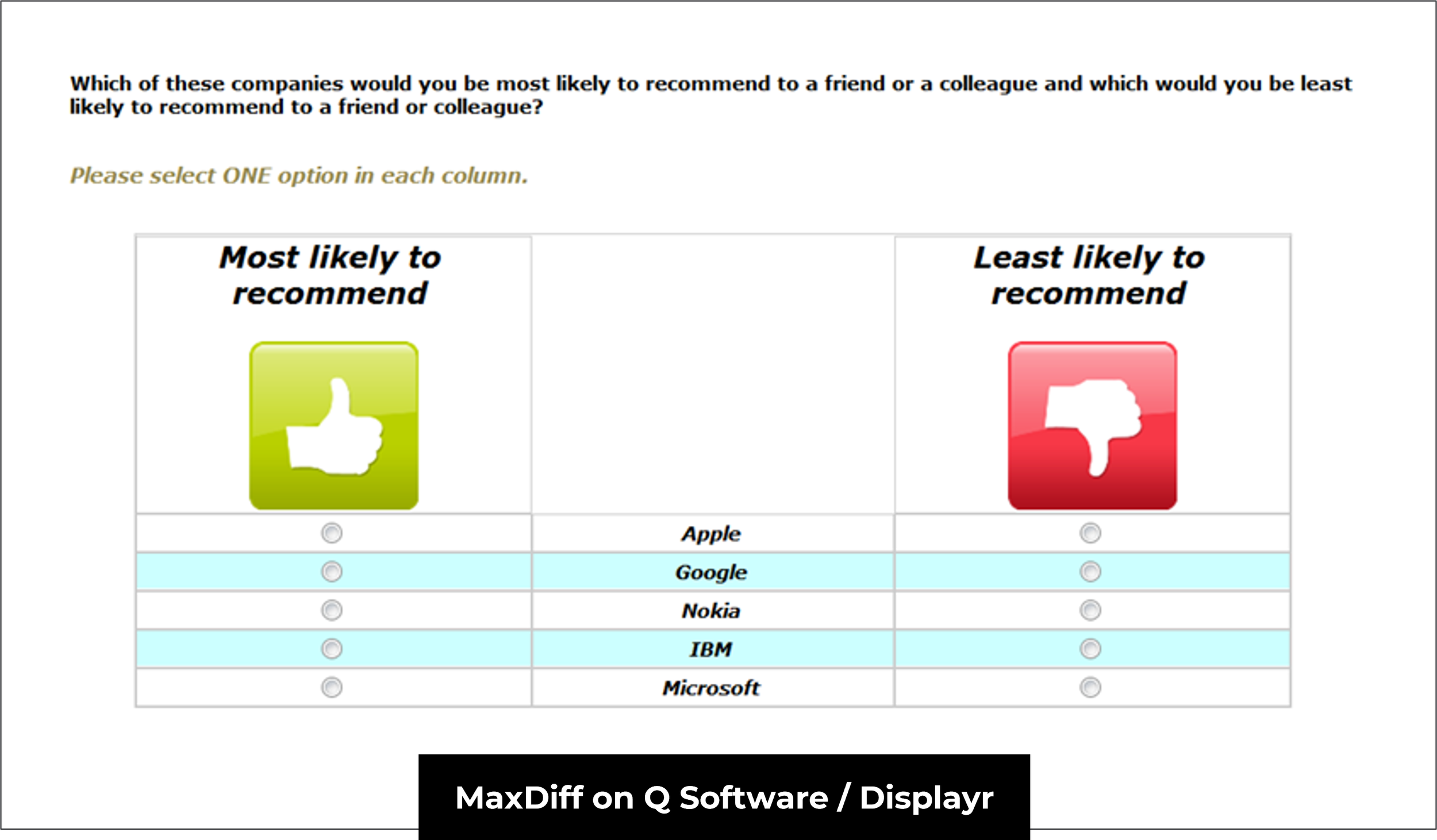

A typical MaxDiff question with a set of written options and radio buttons / checkboxes representing “Best” and “Worst” on each side.
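As a rough illustration of how a tool might assemble each participant's voting sets, here is a minimal Python sketch. It assumes a simple balancing rule (show the least-seen options first, break ties randomly, never repeat an identical set). The `build_sets` function and that rule are hypothetical and are not how OpinionX or any other tool is documented to work.

```python
import math
import random
from collections import Counter


def build_sets(options, per_set, num_sets, seed=None):
    """Build num_sets voting sets of per_set distinct options for one participant.

    Hypothetical rule: prefer the options shown least often so far (ties
    broken randomly) and never repeat an identical combination of options.
    """
    if math.comb(len(options), per_set) < num_sets:
        raise ValueError("not enough distinct combinations for that many sets")
    rng = random.Random(seed)
    shown = Counter()  # how many times each option has been shown so far
    used = set()       # combinations already shown (order ignored)
    pool = list(options)
    sets = []
    while len(sets) < num_sets:
        rng.shuffle(pool)
        pool.sort(key=lambda o: shown[o])  # stable sort keeps ties shuffled
        candidate = pool[:per_set]
        while frozenset(candidate) in used:
            # Least-shown pick was already used; fall back to a random combination.
            candidate = rng.sample(pool, per_set)
        used.add(frozenset(candidate))
        shown.update(candidate)
        sets.append(candidate)
    return sets


if __name__ == "__main__":
    # Hypothetical color list, loosely mirroring the 7-set color example.
    colors = ["Red", "Orange", "Yellow", "Green", "Blue", "Purple", "Pink", "Black"]
    for voting_set in build_sets(colors, per_set=4, num_sets=7, seed=1):
        print(voting_set)
```

Production MaxDiff designs often also balance how frequently each pair of options appears together; this sketch only balances how often each individual option is shown.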

Interactive MaxDiff Analysis Example Survey

The best way to understand how MaxDiff surveys work is to try one for yourself! Here’s an example of a MaxDiff questionnaire being used to rank a list of colors from most to least preferred. You’ll be shown 7 voting sets, after which you can see the overall results.


How is MaxDiff different from other types of survey questions?

MaxDiff is a comparative ranking method — it forces respondents to compare and choose between options according to their personal preferences. This means that MaxDiff creates “continuous” data where answers are all plotted from highest to lowest on a full range of scores.

On the other hand, rating questions (e.g. a 5-star scale) produce “discrete” data, which means that responses are pooled around fixed values like 3/5 stars, 4/5 stars, etc. This makes rating scale questions bad for researchers who are trying to compare and rank things, because participants can give several options (or even all of them) the same top rating.

This is exactly why researchers choose forced comparison methods like MaxDiff over rating-based questions like Likert scales: MaxDiff is much better at mapping the minor differences in people’s preferences, even when they like all the options.

The gaps between people’s preferences are the most important output of ranked results. If we use rating questions, we lose all this rich insight — people’s top priorities all get lumped together under the “5/5 star” response. To avoid this, we want to make sure we’re using research formats that force participants to compare options against each other, such as MaxDiff Analysis.

When is MaxDiff research used?

MaxDiff measures people’s priorities, which means it is well suited for a bunch of different research scenarios such as…

1. Prioritization: Ranking problem statements to figure out which pain point has the largest negative impact on your key customers or which feature they would most like to see built.

2. Sales / Marketing Research: Comparing messaging ideas or product claims to see which resonates best with your target audience.

3. Pricing Research: Identifying which features deliver the most value to customers on a specific pricing tier so that you can improve feature discovery or upgrade messaging.

4. Group Voting: Quickly measuring the preferences or priorities in a room helps make group situations like workshops or team meetings more participatory. MaxDiff is particularly useful when you’re trying to rank subjective opinions or a long list of options.

5. Customer Segmentation: Because MaxDiff measures each person’s preferences, it produces a great data set that you can use to compare the differences in what people from different segments care about (i.e. needs-based segmentation).

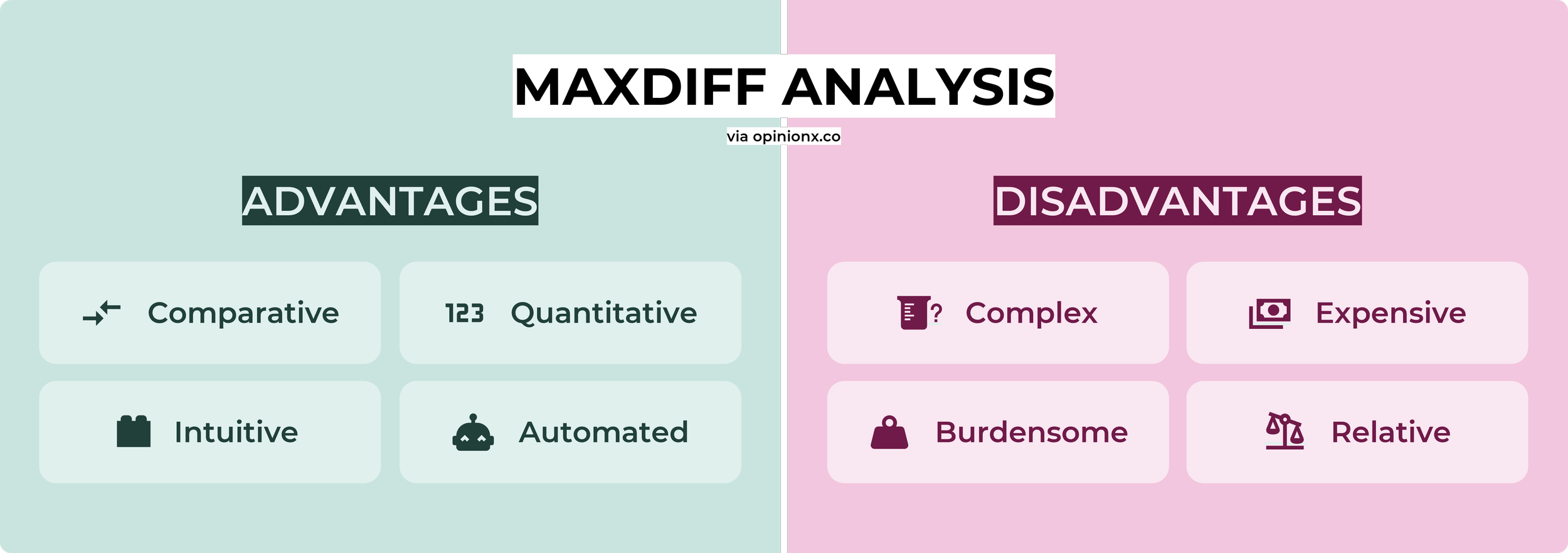

What are the advantages of MaxDiff Analysis?

1. Comparative: By forcing people to compare options, MaxDiff helps you translate their preferences or opinions into a ranked list without anyone having to put everything in order manually.

2. Quantitative: MaxDiff Analysis is a great way to turn text and images into numerical statistics. Most companies tend to value quantitative data over opinions and quotes, so MaxDiff is ideal when planning and informing big decisions.

3. Intuitive: MaxDiff is so simple that a 6-year-old could complete it; just pick the best and worst options from a set of 3-6 choices. It also works just as easily on mobile and tablet as it does on desktop. By contrast, manually ranking or rating a long list places a much higher cognitive load on respondents.

4. Automated: The structured nature of voting means that collecting and analyzing MaxDiff data is just as easy with 10 respondents as it is with 10,000, allowing you to run MaxDiff surveys at scale and get better data for advanced analysis like customer segmentation.

What are the disadvantages of MaxDiff Analysis?

1. Complex: Some products use advanced scoring methods such as linear regression or Bayesian rating systems to translate MaxDiff votes into statistics that can be difficult for most people to decipher. I prefer a simple formula like (best-worst)/appearances, which gives each option a score from -100% to +100% and is a lot easier to explain (and defend if someone challenges your results!). This simple formula is how the MaxDiff question type “Best/Worst Rank” works on OpinionX surveys.

2. Expensive: Most research tools consider MaxDiff to be an advanced research method — for example, SurveyMonkey charges $2700 to access their MaxDiff question (8x higher than their entry-level price). OpinionX offers a free MaxDiff survey tool where all survey methods and analysis features are unlocked so you can test them before making any commitments.

3. Burdensome: While MaxDiff may be intuitive, it can become cognitively burdensome if you show too many options at a time. The general rule of thumb is 3-6 options at most. If in doubt, you can switch to Pairwise Comparison, which only shows two ranking options at a time.

4. Relative: Like all discrete-choice analysis methods, MaxDiff scores the preferences of items relative to each other. This may tell us that one option is better than the others in our list, but it won’t tell us if our overall list of options is a good or bad batch from an absolute perspective. You should always do qualitative research beforehand to ensure your voting list includes a sufficient range of options.

What’s the difference between MaxDiff Analysis and Best-Worst Scaling?

MaxDiff Analysis and Best-Worst Scaling are not the same thing (this is a common misconception about MaxDiff Analysis that people have been spreading online for decades).

MaxDiff Analysis describes the data collection method itself, which requires respondents to pick the two options with the greatest difference in preference or importance. Best-Worst Scaling describes output data that is sorted on a scale from best to worst, which can be done using a range of choice-based comparison methods, only one of which is MaxDiff Analysis. Best-Worst Scaling can also be achieved using Pairwise Comparison, Ranked Choice Voting, or Conjoint Analysis, for example.

While many sources disagree on this distinction (even ChatGPT says they’re the same thing), within academia the definitions for both terms have been correctly differentiated in this manner since 2005. However, outside of academia, the two terms tend to be used interchangeably (even on OpinionX surveys, the MaxDiff Analysis question type is called “Best/Worst Rank”), so don’t sweat the difference here too much :)

How are MaxDiff Analysis results calculated? How does MaxDiff methodology work?

While some tools offering MaxDiff Analysis surveys use advanced algorithms like Bayesian statistics models or linear regression, I’ll stick to the more common aggregate-scoring method for this explanation.

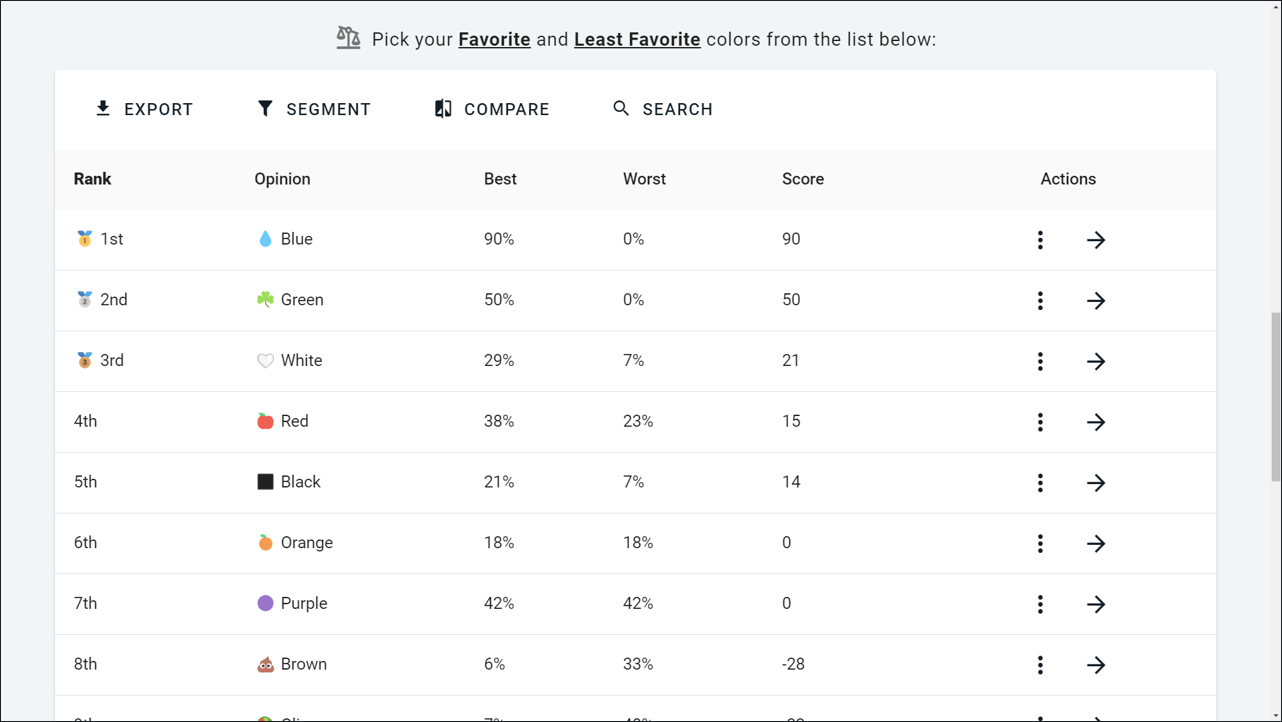

The Aggregate-Scoring Method for MaxDiff Analysis takes the total number of “best” votes, subtracts the total number of “worst” votes, and divides the result by the total number of times the option appeared to participants, i.e. (best-worst)/appearances. For example, if the option appeared in 100 voting sets where it was picked as the best 50 times and the worst 15 times, its score would be (50-15)/100 = 35%. Here’s an example of what results look like on an OpinionX MaxDiff survey:

Screenshot of the results table on a Best/Worst Rank survey hosted on OpinionX
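The aggregate-scoring method is easy to reproduce yourself. Below is a minimal Python sketch that assumes each vote is stored as a tuple of (options shown, best pick, worst pick); that record layout and the `aggregate_scores` name are assumptions, not OpinionX's export format. The demo rebuilds the worked example from the text (100 appearances, 50 best, 15 worst).

```python
from collections import defaultdict


def aggregate_scores(votes):
    """Score each option as (best - worst) / appearances.

    `votes` is a list of (options_shown, best_pick, worst_pick) tuples, one per
    voting set. Scores fall between -1 and +1 (multiply by 100 for -100%..+100%).
    """
    best = defaultdict(int)
    worst = defaultdict(int)
    appearances = defaultdict(int)
    for options_shown, best_pick, worst_pick in votes:
        for option in options_shown:
            appearances[option] += 1
        best[best_pick] += 1
        worst[worst_pick] += 1
    return {
        option: (best[option] - worst[option]) / count
        for option, count in appearances.items()
    }


if __name__ == "__main__":
    # Option "A" appears in 100 sets: picked best 50 times, worst 15 times.
    votes = (
        [(["A", "B", "C"], "A", "C")] * 50
        + [(["A", "B", "C"], "B", "A")] * 15
        + [(["A", "B", "C"], "B", "C")] * 35
    )
    scores = aggregate_scores(votes)
    print(f"{scores['A']:.0%}")  # 35%
```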

How do you calculate a target sample size for your MaxDiff project?

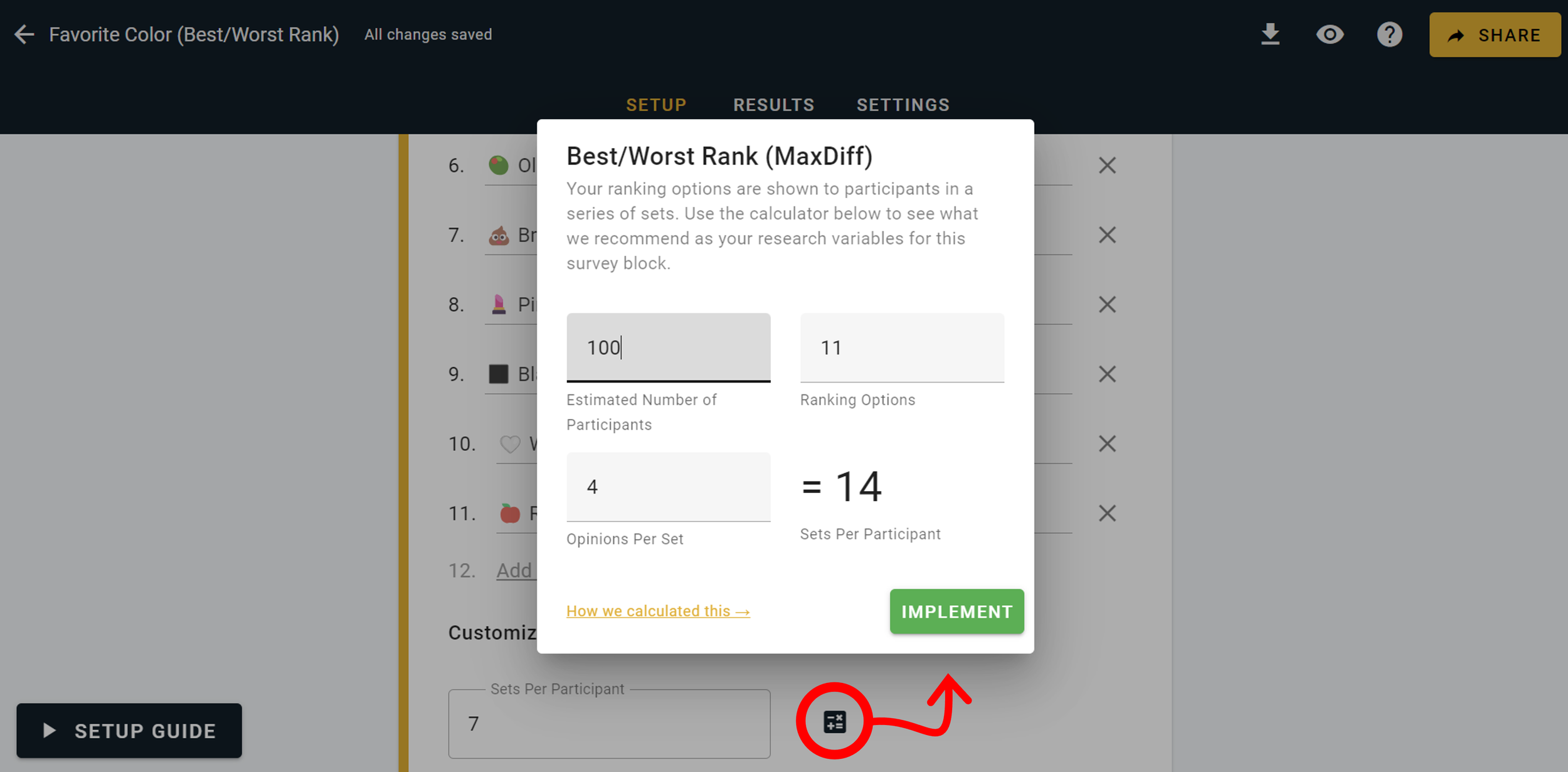

Most guides to MaxDiff Analysis will give you an arbitrary minimum of 100 participants to generate a robust sample size, but this is complete nonsense. The right target sample size can only be determined from the configuration of your MaxDiff survey. Thankfully, OpinionX comes with a built-in calculator to help you find the perfect configuration so that your survey produces a robust result. Here’s how that calculator works:

Every MaxDiff survey is made up of the same set of variables:

x = total number of ranking options in your survey (x = 11 in the screenshot above)

n = number of options per set (i.e. how many options are shown in each comparison vote)

p = number of participants that will complete your survey (your minimum estimate)

s = number of sets per participant (i.e. how many times they'll have to pick Best/Worst)

r = robustness variable that ensures each option will appear in at least r comparisons total

Together, these variables combine into the following formula → s = rx / (np). You should set the r variable to at least 200, i.e. every option should appear for voting at least 200 times during your survey. Otherwise, the other variables are all dependent on how you choose to build your own survey. The formula output, s (sets per participant), is the easiest variable you can alter to control the robustness of your results.

So if we have a survey with 30 ranking options (x), where 4 options are shown in each set (n), and we expect to get at least 80 people to complete the survey (p), then we can use the formula with r = 200 to calculate how many sets to show each participant → 200 × 30 / (4 × 80) = 18.75. For these robustness calculations, if you ever get a decimal output, it’s a good principle to always round up! In this case, the number of sets per participant (s) is 19.
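Here is the same calculation as a small Python function. It assumes options are rotated evenly across sets (which the formula implicitly assumes too) and uses integer ceiling division so the result is always rounded up. The function name is illustrative.

```python
def sets_per_participant(r, x, n, p):
    """Sets each participant must vote on so every option appears at least r times.

    Across the survey there are p * s * n appearances shared by x options, so
    each option appears about p*s*n/x times. Requiring that to be >= r gives
    s >= r*x / (n*p); the result is rounded up to the next whole set.
    """
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-(r * x) // (n * p))


if __name__ == "__main__":
    # The worked example: r = 200, x = 30 options, n = 4 per set, p = 80 people.
    print(sets_per_participant(r=200, x=30, n=4, p=80))  # 19
```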

Some MaxDiff Calculation Caveats:

1. Generally, if the formula results in s < 10, I would still show 10 sets to each participant — MaxDiff voting takes just a few seconds for each set, so gathering extra data can only be a good thing (as long as the rest of your survey is not too long; see point 3).

2. You can also use this robustness formula to calculate other variables as the key output, not just the number of sets per participant. For example, I could use the formula to figure out the number of participants to recruit by moving the variables around like this → p = rx / (sn)

3. If your survey has multiple ranking questions, you should consider the total number of votes a participant will have to cast across your entire survey when deciding on the number of sets per participant (s) for your MaxDiff block. The higher your total number of sets across the whole survey, the lower your completion rate will be; generally, anything above 40 sets within a survey is a lot to expect of any participant (unless you provide a strong incentive like a financial reward).

4. If segmentation is going to be an important part of your analysis, you should replace the participants variable (p) with your estimate of the number of people you expect to reach from your smallest key segment.
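The rearranged formula and the caveats can be combined into one small planning helper. In this Python sketch, the 10-set minimum and the 40-set total come from the rules of thumb above; the function names and the `other_sets` parameter (votes from other ranking questions in the same survey) are hypothetical.

```python
def participants_needed(r, x, s, n):
    """Rearranged robustness formula p = rx / (sn), rounded up."""
    return -(-(r * x) // (s * n))  # integer ceiling division


def plan_sets(r, x, n, p, other_sets=0, min_sets=10, max_total=40):
    """Return (sets for this MaxDiff block, whether the survey looks too long).

    Applies the caveats: never fewer than `min_sets` sets, and flag surveys
    whose total votes (this block plus `other_sets` from other ranking
    questions) exceed `max_total`. For segment analysis, pass the expected
    size of the smallest key segment as `p`.
    """
    s = max(min_sets, -(-(r * x) // (n * p)))
    return s, s + other_sets > max_total


if __name__ == "__main__":
    print(participants_needed(r=200, x=30, s=19, n=4))       # 79
    print(plan_sets(r=200, x=11, n=4, p=100))                # (10, False)
    print(plan_sets(r=200, x=30, n=4, p=80, other_sets=25))  # (19, True)
    print(plan_sets(r=200, x=30, n=4, p=20))                 # (75, True)
```

The last example shows why segmentation matters: planning around a 20-person segment pushes the block to 75 sets, far beyond the 40-set comfort zone, which signals that you need more participants or fewer options.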

Comparing The 10 Most Popular Tools For MaxDiff Analysis Surveys

For each of the tools in this list, I considered the following criteria:

1. Is there a free version available?

2. Can I try it myself without having to talk to a sales team?

3. Is the survey well designed / usable and are there sufficient analysis features?

As you’ll see, all but one of the tools turned out to be paid-only and for some I couldn’t even find proof that they really have a MaxDiff format at all…

1. OpinionX ✅

OpinionX is a free survey tool for ranking people’s priorities. It comes with a MaxDiff Analysis question type called “Best/Worst Rank”, which you can use on the free version of the tool. All survey methods and analysis features are unlocked on the free plan so you can test them. If you need to collect more responses, you can purchase a paid plan (e.g. the Analyze tier with up to 1,000 participants per survey, or the Accelerate tier for unlimited participants).

Here’s a full breakdown of OpinionX’s free and paid plans:

OpinionX Free: The free version allows you to choose how many sets each participant must vote on (it comes with a built-in robustness calculator to help you figure out the right number, depending on the expected number of participants). OpinionX’s free tier lets you create unlimited surveys with unlimited questions and teammate seats, and you can test each survey with up to 10 participants before committing to a paid plan.

OpinionX Analyze: Continue enjoying everything on the free plan, while unlocking up to 1,000 participants per survey. Price: $900/year.

OpinionX is used by tens of thousands of teams around the world, including researchers at companies like Google, Amazon and Microsoft, as well as national governments and Ivy League academics. OpinionX’s paid subscriptions for MaxDiff are more affordable than any other tool on this list.

— — —

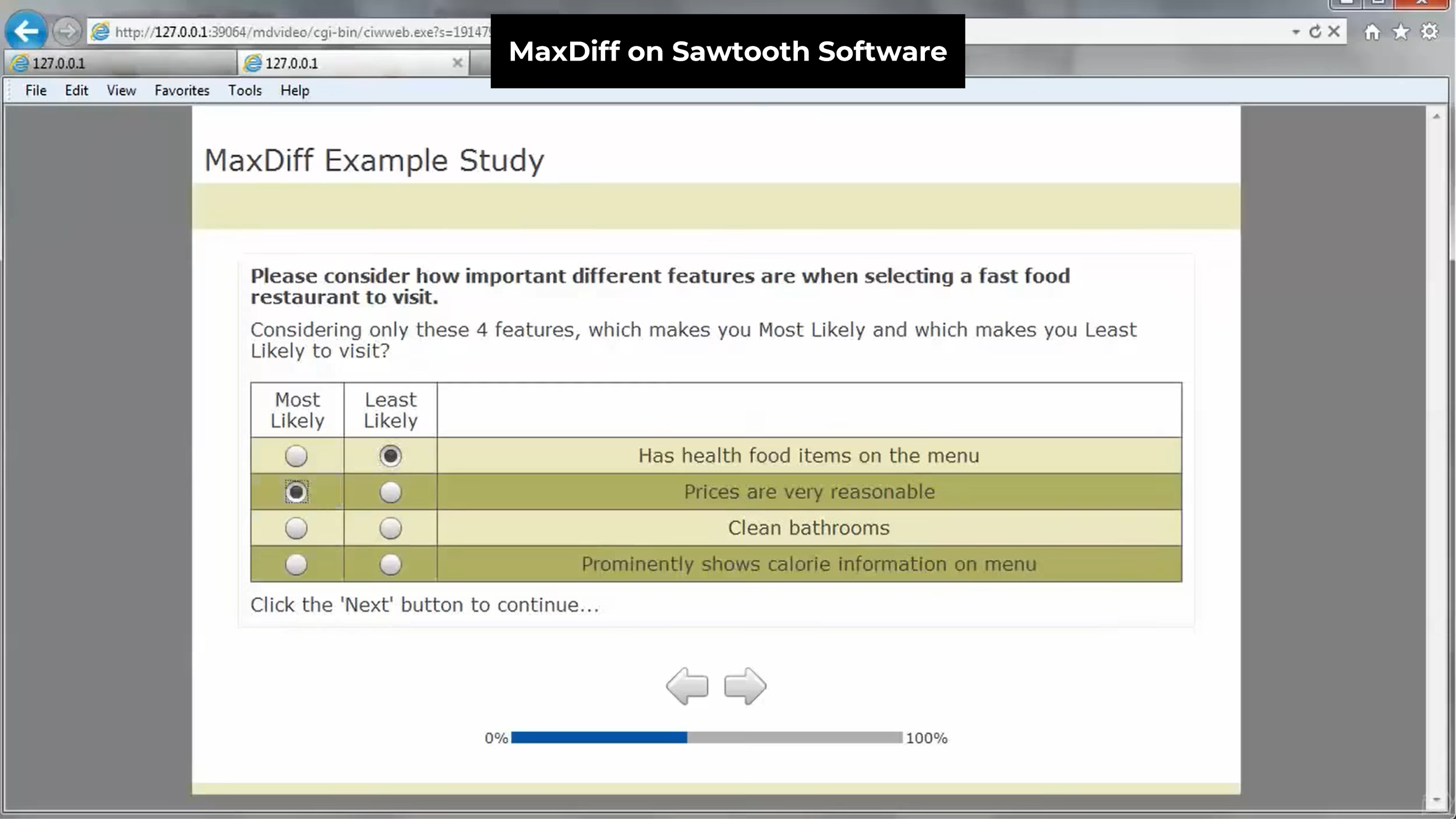

2. Sawtooth Software ❌

Sawtooth Software is a provider of digital market research solutions, with a specific focus on conjoint analysis solutions. Founded in 1983, the company focuses on academics and market research organizations and offers research expertise as an additional available service.

Sawtooth created a proprietary version of MaxDiff Analysis which they call “Bandit MaxDiffs”. This novel approach leverages Thompson Sampling to algorithmically adapt its selection of the most informative choice sets for each subsequent participant comparison.
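Sawtooth's implementation is proprietary, so the following is only a toy Python illustration of the general Thompson Sampling idea, not their algorithm. It assumes each option's chance of being picked as best follows a Beta distribution built from its vote counts; on each step it draws one sample per option and shows the options with the highest draws, so promising options get shown more often while uncertain ones still get explored. The `thompson_pick` function and the Beta model are assumptions.

```python
import random


def thompson_pick(stats, per_set, rng=random):
    """Toy Thompson Sampling step: choose which options to show in the next set.

    `stats` maps option -> (times_picked_best, times_shown). Each option's
    chance of being picked as best is modeled as Beta(best + 1, shown - best + 1),
    i.e. a uniform prior updated by the votes so far. One value is drawn per
    option and the `per_set` options with the highest draws are shown.
    """
    draws = {
        option: rng.betavariate(best + 1, shown - best + 1)
        for option, (best, shown) in stats.items()
    }
    return sorted(draws, key=draws.get, reverse=True)[:per_set]


if __name__ == "__main__":
    # Hypothetical running tallies; "D" has never been shown, so its draw is
    # uniform and it still gets a fair chance of being explored.
    stats = {"A": (40, 60), "B": (5, 60), "C": (20, 60), "D": (0, 0), "E": (12, 60)}
    print(thompson_pick(stats, per_set=3, rng=random.Random(7)))
```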

Sawtooth Software starts at $4,500/year for their “Basic” package and $15,000/year for the full package. There is no free or demo version of their MaxDiff tool. You can request a demo of their Lighthouse Studio product (a Windows-only desktop application) from their sales team if you want to learn more.

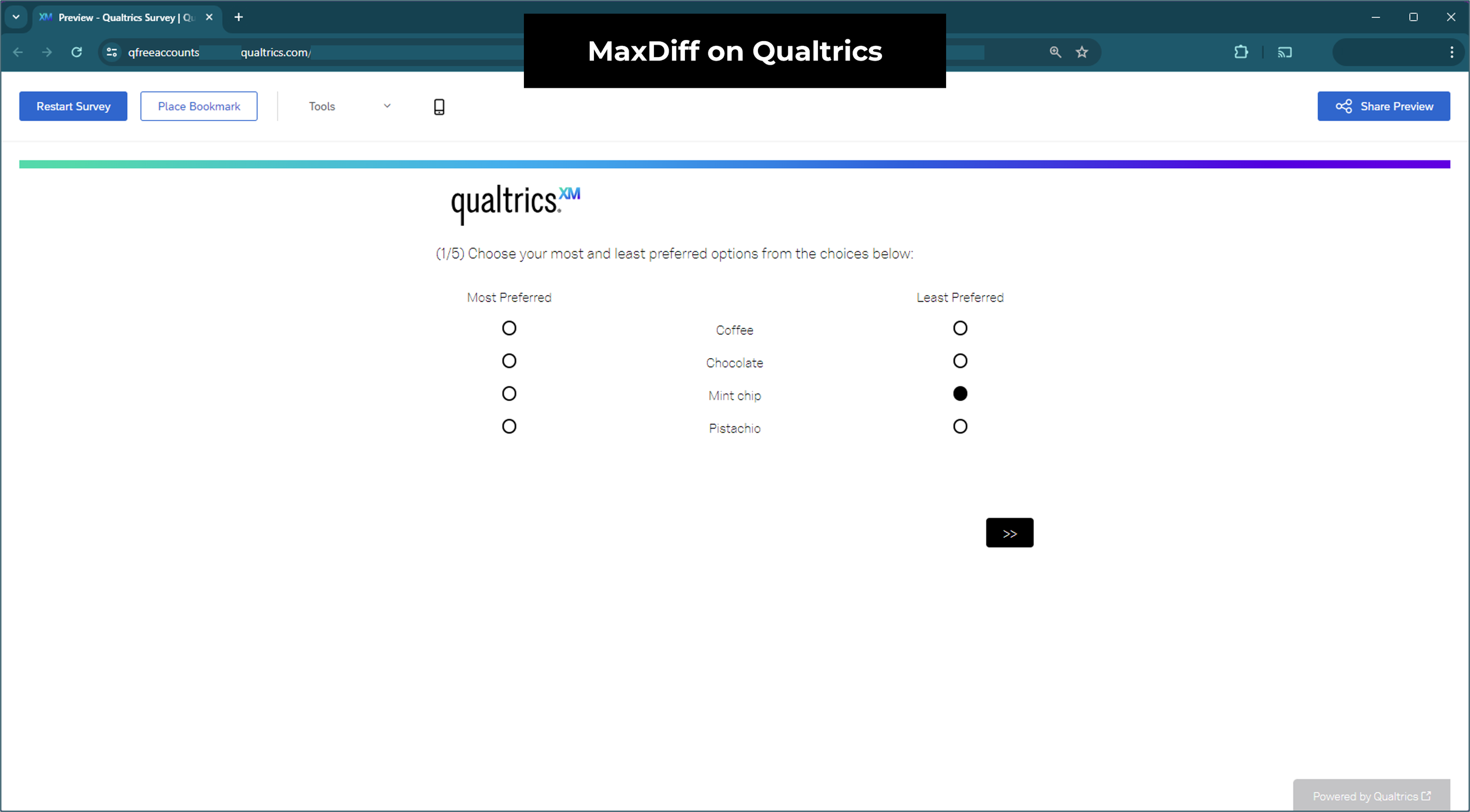

3. Qualtrics ❌

Qualtrics has a MaxDiff question format, but it is only available if you have an existing PX or EX premium subscription, or a CX license that includes the journey optimizer. Even then, you’ll still need to make an additional purchase via your Account Executive to unlock MaxDiff on Qualtrics.

Considering a base-level CX license costs $5,000/year for one CoreXM user, plus $1 per respondent, and Conjoint is an additional add-on starting at $8,000/year, it appears that MaxDiff on Qualtrics would have a $10,000 entry price plus respondent fees for a new customer.

I managed to get access to a test version of MaxDiff Analysis on Qualtrics, which shows a dummy survey that is already filled in with sample data (screenshot above). The dummy survey seems to show that the MaxDiff voting sets are fixed and identical for every participant, which is unusual and not very useful for most MaxDiff research projects.

4. SurveyMonkey ❌

SurveyMonkey added a MaxDiff question type in October 2023 called “Best-Worst Scale”. Their pricing page shows that it is available on all paid plans, but via a friend’s premium account I was shown that it is only available if I upgrade to Team Premier (the most expensive plan) starting from $2700/year ($75/month x 3-seats minimum x 12-month upfront only). Hard to know which is true, as the information about this feature from SurveyMonkey is contradictory and inconsistent.

Unfortunately, after searching high and low (pun intended) for literally a single example of someone even mentioning it online, I could not find a single screenshot of what their “Best-Worst Scale” actually looks like. Based on an obscure Reddit comment I found, it seems like maybe one person used it in December 2023, but that survey has since been closed so I couldn’t take a look for myself.

Honestly, it seems suspicious that not a single person has mentioned this on the internet other than the press release announcing its launch. Based on SurveyMonkey’s usage stats, 4.2 billion questions have been answered since then, and somehow nobody has screenshotted or posted anything about this??? SurveyMonkey doesn’t even have any screenshots in their own help center article about this question type; they decided to use text tables instead (screenshot above).

So very weird… My guess, based on the original press release, is that this is a feature of their Market Research Enterprise Solutions range, which would definitely cost a lot more than the stated $2700/year price tag. Hopefully someone can confirm to me whether this actually exists on the self-service product and isn’t just some sort of fake-door test from the PM team there?

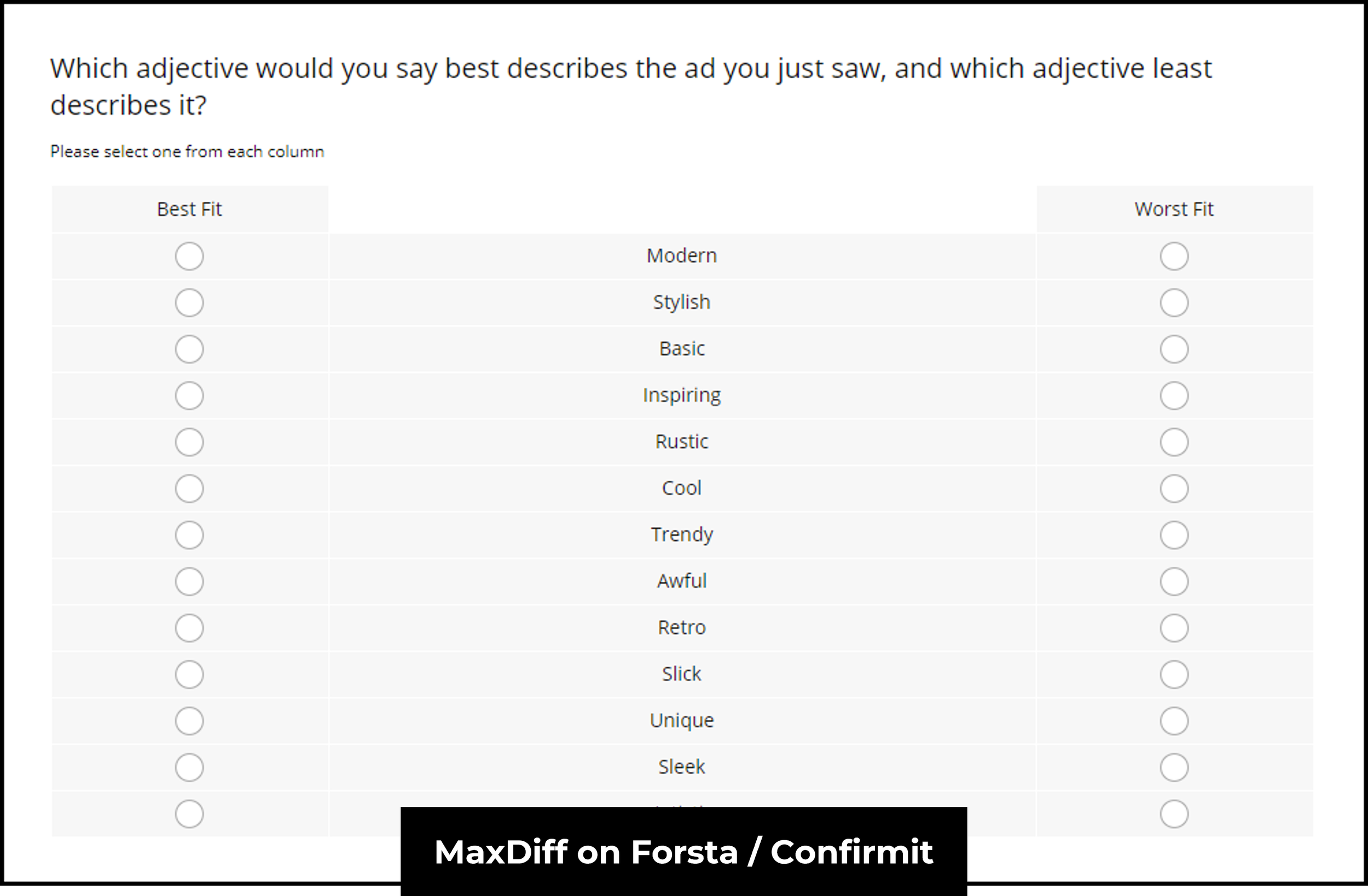

5. Forsta ❌

Forsta (the brand that emerged from the mega-merger between Confirmit, FocusVision Decipher, and Dapresy) offers a MaxDiff Analysis survey format as part of their “dynamic questions” range. I turned over every rock on the internet to find (a) a live demo or (b) a rough price range for Forsta, but I couldn’t find anything in the end. Seems like you’ll have to go through a full sales qualification conversation if you want to find this out for yourselves (🚩). Good luck to the brave souls willing to go through that 🫡

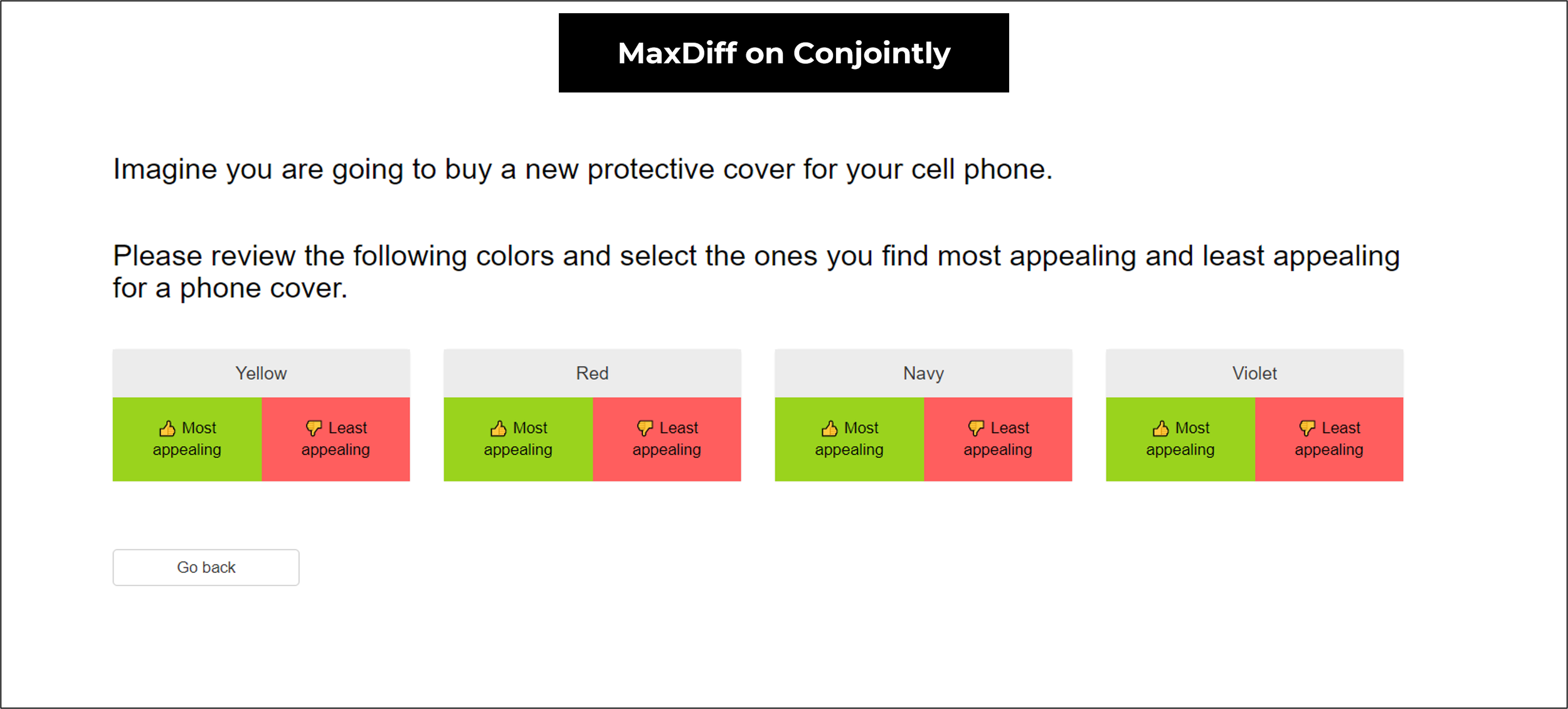

6. Conjointly ❌

Conjointly is an advanced market research platform that specializes in (yep, you guessed it) conjoint analysis software. A standard subscription for Conjointly includes a MaxDiff Analysis survey format for a base rate of $1795/user/year, however it does have quite an unorthodox user interface for voting as you can see in the screenshot above. It is worth noting that Conjointly is an advanced tool and you will likely need additional support services if you haven’t used something like it in the past.

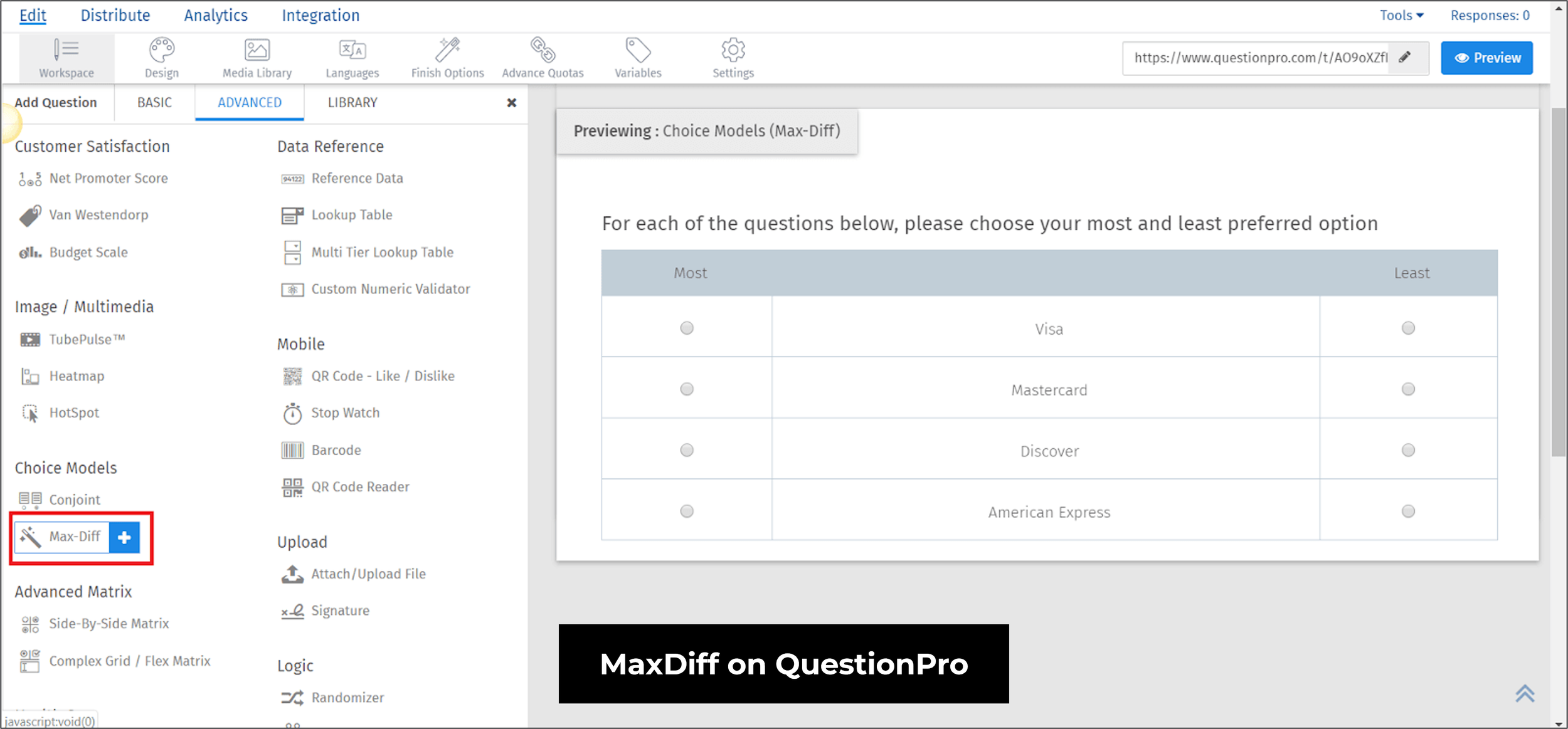

7. QuestionPro ❌

QuestionPro is another of the big survey platforms. It doesn’t differ much from products like SurveyMonkey in functionality or price; it’s just another alternative really. QuestionPro offers MaxDiff and Conjoint Analysis questions only in their most expensive tier, which is called “Research Suite” and is available by custom quote only. However, their “Workplace” product starts at $5,000/year, so it is fair to assume that the Research Suite costs at least $10,000/year.

8. SurveyKing 🟠

SurveyKing offers a survey platform with a wide range of question types for a low price. Free users can create a test MaxDiff survey; however, you can only add three ranking options, so really it’s just a preview of the survey design and can’t be used to actually rank anything. Strangely, there’s nothing preventing the same set of options from appearing many times for the same participant on SurveyKing, which is a red flag for survey usability and data integrity. To unlock MaxDiff for actual survey use, you’ll have to upgrade to the premium version for $19/user/month (which does come with a limited number of responses per month).

9. Alchemer ❌

Alchemer (formerly SurveyGizmo) is a survey platform that, in the company’s own words, “provides tools that rival Qualtrics at a price point closer to SurveyMonkey”. Alchemer offers a MaxDiff Analysis question type but it is only available on their “Full Access” plan (their most expensive tier) starting at $1895/user/year. There is no free trial available and “Full Access” is only for up to a maximum of 3 users — beyond that you’ll have to negotiate a custom enterprise contract.

10. Q Research Software / Displayr ❌

Q Research Software is a data analysis and reporting tool designed specifically for traditional market researchers. The tool is part of Displayr’s portfolio of data products. Q Research offers specialized functionality for this customer segment such as automated data cleaning, formatting, and statistical testing. Unfortunately they don’t have a free tier or even a publicly-available demo of their MaxDiff tool — you must purchase either their Standard License ($2235/year) or Transferable License ($6705/year) before getting your hands on it.

— — —

4 Alternatives to MaxDiff Analysis for Ranking & Prioritization Surveys

There are four alternative survey methods that you can use instead of MaxDiff Analysis:

Pairwise Comparison

Ranked Choice Voting

Points Allocation (aka Constant Sum)

Conjoint Analysis

Alternative 1: Pairwise Comparison

Pairwise Comparison ranks a list of options through a series of head-to-head pair votes. By counting the percentage of pairs an option “wins”, you can easily rank people’s preferences from best to worst option. Pairwise Comparison works almost identically to MaxDiff but with only 2 options instead of 3 or more, making the voting process a lot quicker and easier. OpinionX lets you create unlimited pairwise comparison surveys for free, and it supports image-based pairwise comparison too!
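The win-percentage scoring described above fits in a few lines of Python. This sketch assumes each pair vote is recorded as a (winner, loser) tuple; the data and the `pairwise_win_rates` name are hypothetical.

```python
from collections import Counter


def pairwise_win_rates(votes):
    """Rank options by the share of their head-to-head pair votes they won.

    `votes` is a list of (winner, loser) tuples, one per pair vote.
    Returns (option, win_rate) tuples sorted from best to worst.
    """
    wins = Counter()
    appearances = Counter()
    for winner, loser in votes:
        wins[winner] += 1
        appearances[winner] += 1
        appearances[loser] += 1
    rates = {option: wins[option] / count for option, count in appearances.items()}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical pair votes.
    votes = [
        ("Apples", "Bananas"),
        ("Apples", "Cherries"),
        ("Cherries", "Bananas"),
        ("Bananas", "Apples"),
        ("Apples", "Cherries"),
    ]
    for option, rate in pairwise_win_rates(votes):
        print(f"{option}: {rate:.0%}")
```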

— — —

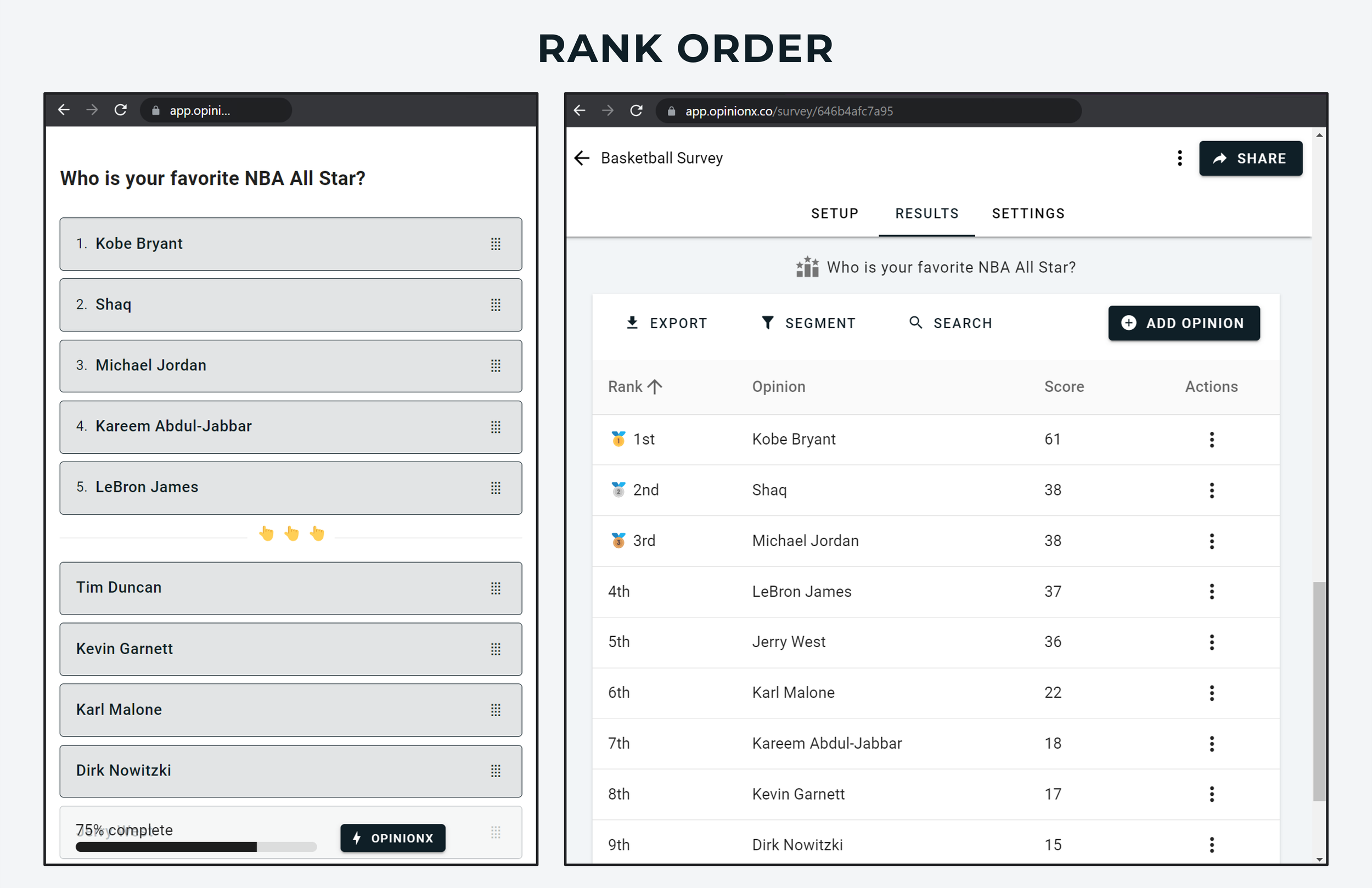

Alternative 2: Ranked Choice Voting

Ranked Choice Voting gives each respondent the full ranking list and asks them to place the options in order according to their personal preferences. It’s the simplest of the four alternatives explained in this post, but it has a shortcoming worth noting: it doesn’t scale well to long lists, so it is recommended that you limit the number of statements in a Rank Order question to 6-10 max. Beyond that number, you should move your question to MaxDiff or Pairwise Comparison, which are both better formats for ranking long lists. The free tier on OpinionX includes a ranked choice voting tool.

— — —

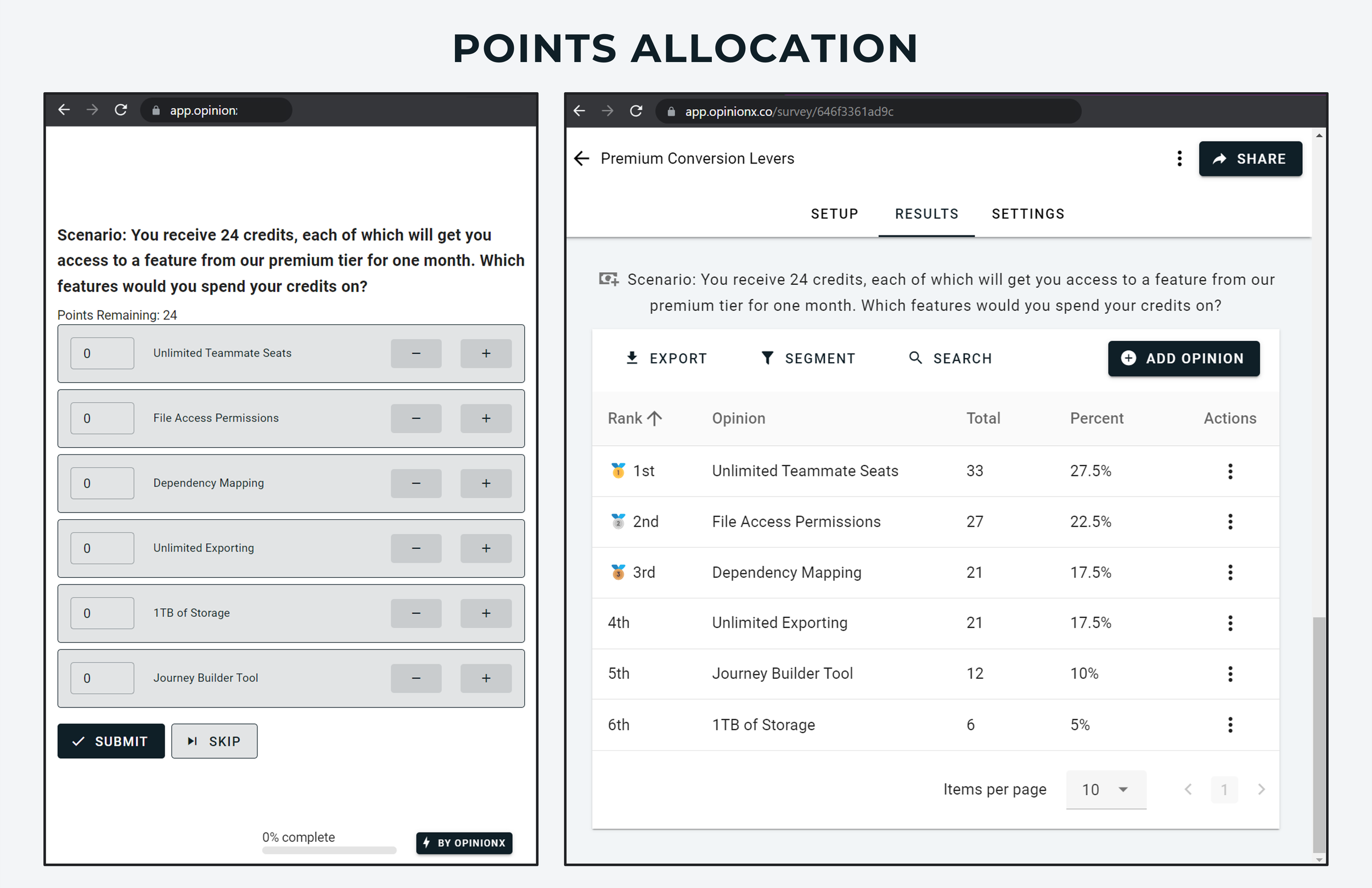

Alternative 3: Points Allocation / Constant Sum

One disadvantage of both MaxDiff and Pairwise Comparison is that they estimate the preferences of items relative to each other but don’t tell us if our list of ranking options is a good or bad batch from an absolute perspective. That’s where Points Allocation comes in (also known as a Constant Sum survey). It gives each participant a pool of credits they can allocate amongst options in whatever way best represents their personal preferences. It doesn’t just show the relative preference; it shows the magnitude of their preference. For example, Points Allocation tells us more than the fact that Simon prefers apples to bananas: we see that he would give 9 of his 10 points to apples (he really prefers apples). You can create free points ranking surveys on OpinionX.
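Aggregating a Points Allocation survey is mostly averaging. This Python sketch assumes a 10-point budget that must be fully spent and treats any option a participant left out as 0 points; both are assumptions. Simon's remaining point is assumed to go to bananas, and the second participant's allocation is made up for illustration.

```python
def average_points(allocations, budget=10):
    """Average points per option across participants in a constant-sum survey.

    `allocations` is a list of {option: points} dicts, one per participant.
    Assumes every participant must spend exactly `budget` points; an option a
    participant left out counts as 0 because we divide by all participants.
    """
    totals = {}
    for allocation in allocations:
        if sum(allocation.values()) != budget:
            raise ValueError(f"{allocation} does not sum to {budget} points")
        for option, points in allocation.items():
            totals[option] = totals.get(option, 0) + points
    return {option: total / len(allocations) for option, total in totals.items()}


if __name__ == "__main__":
    simon = {"Apples": 9, "Bananas": 1}           # remaining point assumed to be bananas
    participant_2 = {"Apples": 4, "Bananas": 6}   # hypothetical
    print(average_points([simon, participant_2]))  # {'Apples': 6.5, 'Bananas': 3.5}
```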

— — —

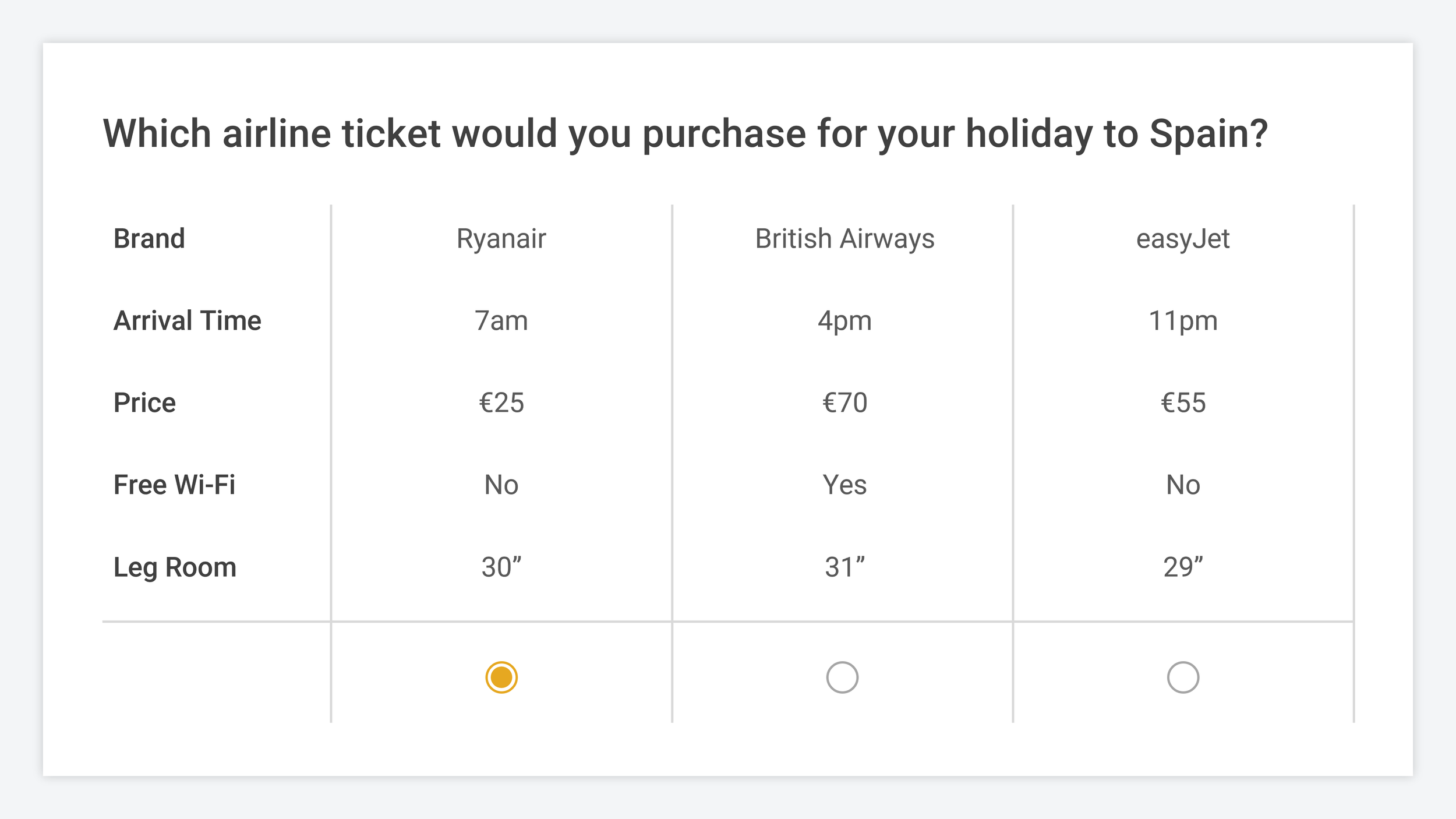

Alternative 4: Conjoint Analysis

Conjoint Analysis is a multi-factor ranking method that asks participants to vote on profiles that each contain multiple variables. It’s used to figure out how important different aspects/features of a product are in the overall offering for a buyer. For example, Conjoint Analysis will help us rank the importance of a phone’s battery life, storage space, and color, while at the same time helping us rank the various possible colors too (eg. black, white, rose gold).

As you can probably guess from that description, Conjoint Analysis is a lot more complex than MaxDiff Analysis or the other comparative ranking methods explained in this guide. As such, it is also a lot more expensive: the most popular conjoint analysis tools range from $2,000 to $30,000 per year.
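To see why conjoint is more complex, consider how quickly profiles multiply. This Python sketch builds every possible phone profile from a set of attribute levels; only the color levels come from the example above, while the battery and storage levels are invented. Real conjoint tools typically show only a subset of profiles and fit a statistical model to estimate how much each level matters, which this sketch does not attempt.

```python
from itertools import product

# Hypothetical attribute levels: the colors come from the phone example,
# the battery and storage levels are invented for illustration.
attributes = {
    "battery_life": ["12h", "24h", "36h"],
    "storage": ["64GB", "128GB", "256GB"],
    "color": ["black", "white", "rose gold"],
}

# A profile takes one level from each attribute; product() yields every combination.
profiles = [dict(zip(attributes, levels)) for levels in product(*attributes.values())]

# Number of distinct head-to-head pairs a respondent could be asked to compare.
pair_count = len(profiles) * (len(profiles) - 1) // 2

print(len(profiles))  # 27
print(profiles[0])    # {'battery_life': '12h', 'storage': '64GB', 'color': 'black'}
print(pair_count)     # 351
```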

Good news though! As well as being the only free survey tool for MaxDiff Analysis, OpinionX is also the number one free conjoint analysis tool! Learn more about running Conjoint Analysis surveys on OpinionX.

— — —

Create A Free MaxDiff Analysis Survey

There’s only one tool that offers free MaxDiff Analysis surveys: OpinionX. On the free tier you can create unlimited surveys with all question types and analysis features unlocked, and test your MaxDiff survey setup and results with up to 10 participants before upgrading. If you’re running larger studies, OpinionX’s Analyze plan lets you scale up to 1,000 participants per survey with advanced export and segmentation features for $900/year. Try it out today for your next ranking exercise, user research project, or prioritization sprint.