Discrete Choice Experiment: Methods, Examples, Tools

Despite being widely used by companies like Google, Amazon, and Facebook for user research and pricing studies, Discrete Choice Experiments remain surprisingly under the radar amongst researchers, especially those outside of market research.

This guide breaks down everything you need to know about Discrete Choice Experiments, giving you the tools to run powerful, statistically sound studies that uncover what people really value. By the end, you’ll be confidently designing choice-based surveys and maybe even earning a reputation as your team's resident stats wizard.

Contents

2. Application

3. Use Cases

4. Methods

5. Tools

7. Examples

— — —

What is a Discrete Choice Experiment?

A Discrete Choice Experiment is any survey method that asks people to compare a set of options and make decisions based on their personal preferences and priorities. By analyzing a large number of these individual decisions, you can model how people make choices and what matters most to them.

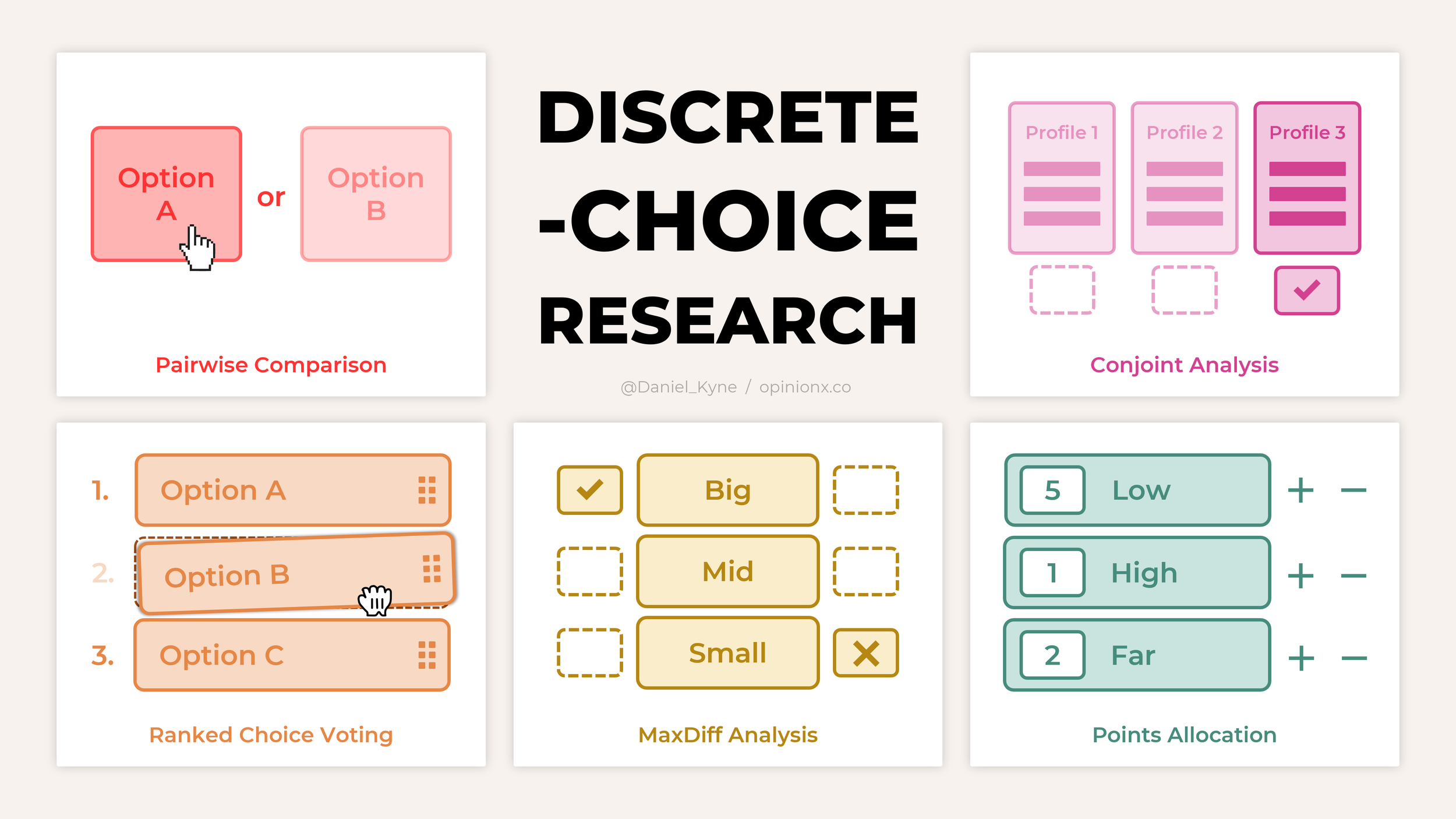

Many types of surveys fall under the umbrella of discrete choice experiments, ranging from simple head-to-head voting on two options at a time to more complex formats like conjoint analysis where respondents choose between profiles made up of different combinations of attributes such as price, color, or size.

What all of these methods have in common is simple — they present lists of distinct options (discrete) for participants to pick from (choice) and use those results to estimate how people would likely behave in similar real-world scenarios.

Many people mistakenly assume that “discrete choice experiment” is just another term for conjoint analysis, but that’s not true! Conjoint is just one type of discrete choice experiment. This guide walks through several different Discrete Choice Experiment formats, with clear explanations and examples for each.

— — —

What are Discrete Choice Experiments used for?

When trying to measure people’s preferences, researchers often fall back on rating scale questions like:

“Rate your satisfaction with ____ from 1 to 5 stars.”

But there’s a major flaw with rating scale questions — they let people give everything the same score. In fact, that’s one of the most common outcomes. Every option ends up looking equally important, making it nearly impossible to tell what people actually care about.

Discrete Choice Experiments are better suited to measuring people’s preferences. They force respondents to make trade-offs, comparing options side by side and choosing the one that best reflects their preferences. This mimics real-world decision-making much more closely, where you have to weigh pros and cons and make a choice rather than saying “everything!”

— — —

When to Use Discrete Choice Experiments

Discrete Choice Experiments really shine in scenarios where you’re ranking things that don’t have an obvious or objective score.

It’s easy to rank movies by their Rotten Tomatoes rating or songs by Spotify streams. But what about more subjective questions, like:

What product features do users care about most?

What frustrations are most painful for your customers?

What benefits do clients seek most from our services?

These are exactly the types of messy, nuanced questions where Discrete Choice Experiments excel. They help you cut through vague ratings and get clear, structured insights about what people truly value based on how they actually make decisions.

That’s why product teams and UX researchers often use Discrete Choice Experiments to measure things like:

🏆 Preference — What do users like most?

🤕 Pain — What problems are most frustrating?

💰 Value — What features are worth most to users?

⚠️ Risk — What concerns or worries users the most?

🚧 Friction — What barriers stop users from taking action?

❤️🔥 Motivation — What drives users to take action?

Because of this, Discrete Choice surveys are a go-to method for user research projects such as:

Customer Segmentation — Understand which types of customers care most about solving specific pains.

Roadmap Prioritization — Plan what to build next by identifying users’ top unmet needs.

Assumption Testing — Validate or disprove your hypotheses about user preferences.

Value Analysis — Identify which features your users perceive as most valuable.

Message Testing — Find out which messages resonate most with your target audience.

Pricing Studies — Quantify which features users are willing to pay for, and how much.

— — —

What survey formats are Discrete Choice Experiments?

Now that we’ve covered what Discrete Choice Experiments are and when to use them, let’s dive into the five most popular Discrete Choice survey methods, with real examples to help you choose the right one for your next study.

The five methods are:

Conjoint Analysis — Choose the most appealing ‘profile’ to reveal the importance of each attribute (eg. price, color, size) and the range of potential options within each attribute (eg. red, green, blue for color).

MaxDiff Analysis — Pick the best and worst options from a series of sample sets (usually 3-6 options at a time) to uncover people’s preferences.

Pairwise Comparison — Compare two options at a time in a series of head-to-head battles.

Ranked Choice Voting — Rank all options in order of personal preference to reveal collective priorities.

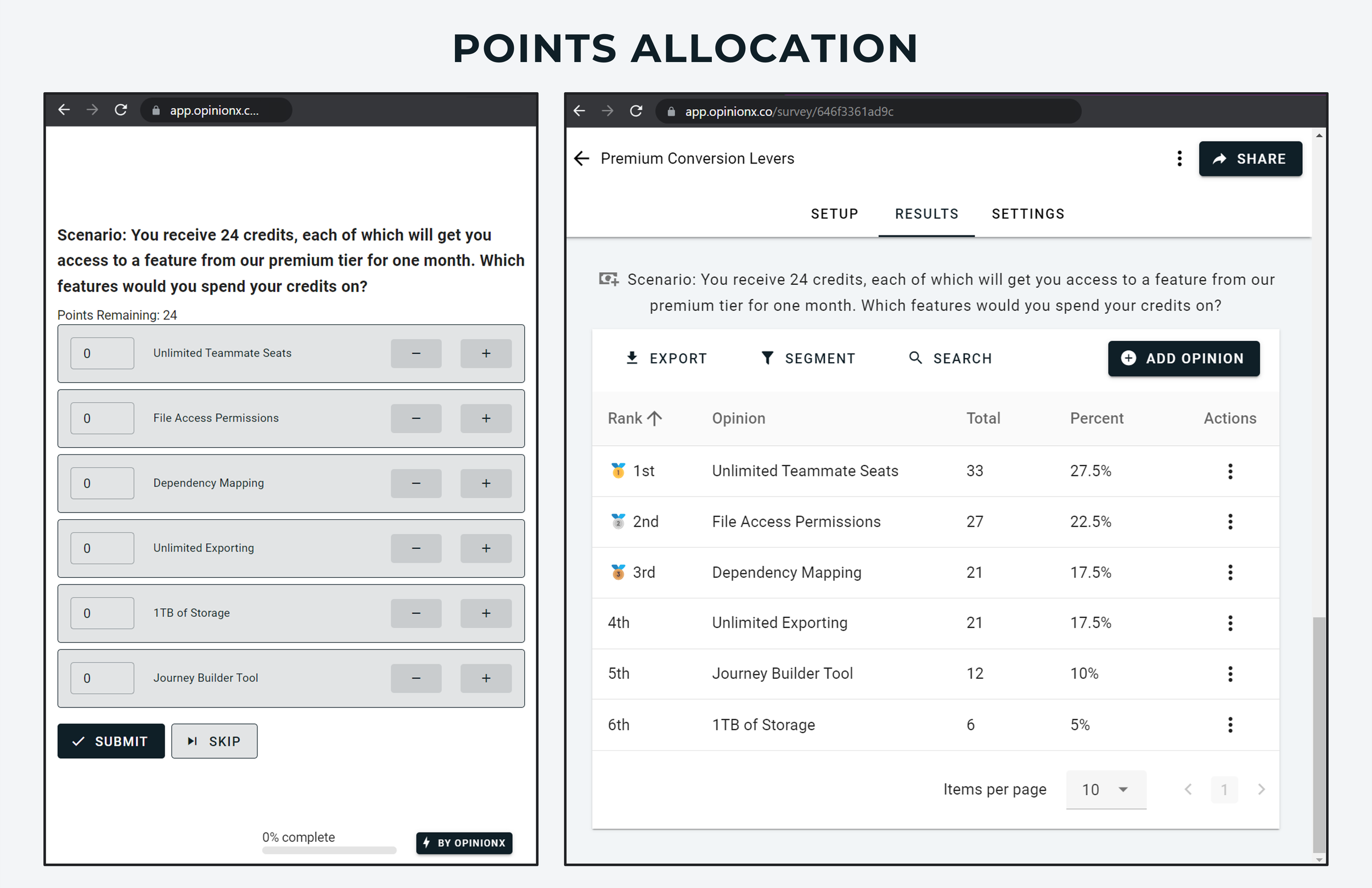

Points Allocation — Distribute a limited number of points across a list of options to show how strongly people prefer each one.

— — —

Method 1: Conjoint Analysis

Conjoint Analysis is ideal when you’re ranking the importance of things that have multiple layers of information.

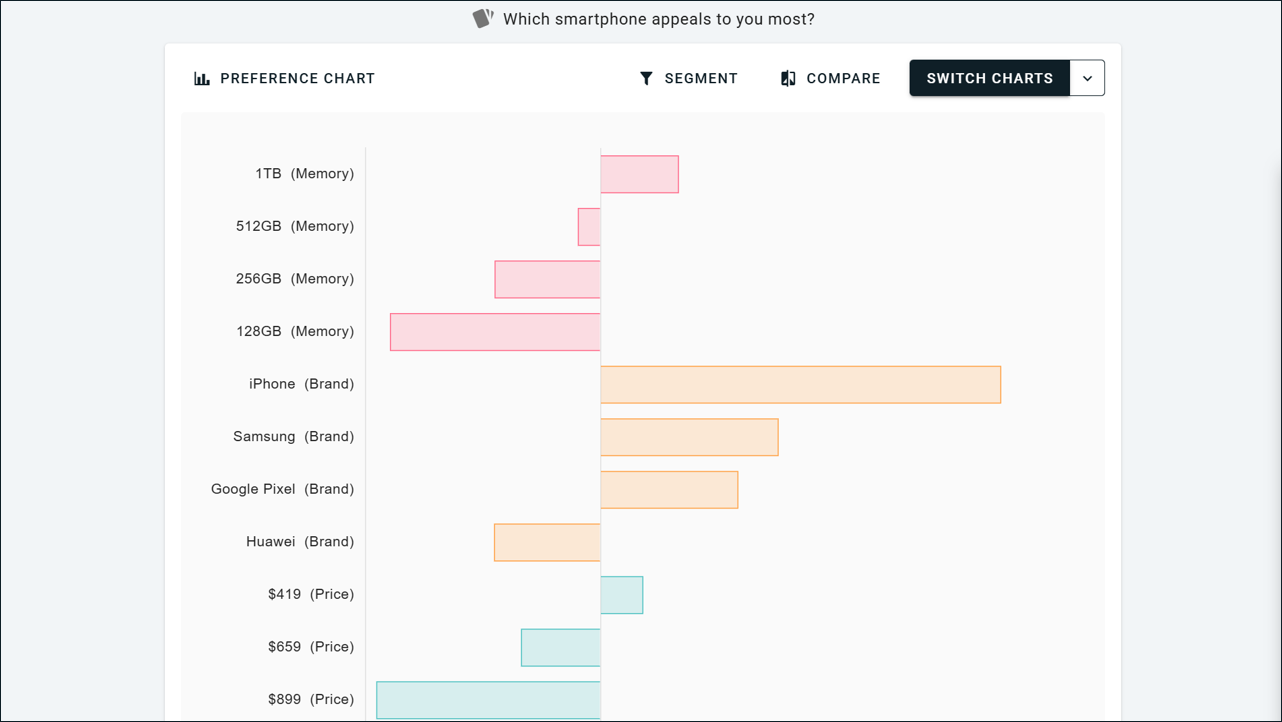

Let’s say you're evaluating smartphones. Each phone has multiple attributes (like brand, storage, color, battery life) and within each attribute there are various options (eg. iPhone, Samsung, or Google Pixel could be options within the Brand attribute).

Conjoint Analysis helps you answer two questions:

Which attributes influence people’s decisions the most?

Which specific options within each attribute are preferred most?

How does conjoint analysis work?

Participants are shown sets of 2-5 profiles at a time. Each profile includes one option from each attribute. The participant’s task is to pick the profile they prefer most in each round. After a few rounds, the system has enough data to estimate which attributes matter most and which options are driving their decisions.
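To make the estimation step above more concrete, here is a minimal sketch that uses simple counting: how often each option is picked when shown, and how widely those pick rates vary within each attribute. The data, the function names, and the counting approach itself are assumptions for illustration. Dedicated conjoint tools typically fit a statistical choice model instead, so treat this as a rough approximation rather than how any particular platform works.

```python
from collections import defaultdict

# Hypothetical survey data: each round records the profiles shown and the
# index of the profile the respondent picked.
rounds = [
    {"shown": [{"brand": "iPhone", "storage": "128GB"},
               {"brand": "Samsung", "storage": "256GB"}], "chosen": 0},
    {"shown": [{"brand": "iPhone", "storage": "256GB"},
               {"brand": "Samsung", "storage": "128GB"}], "chosen": 0},
    {"shown": [{"brand": "Samsung", "storage": "128GB"},
               {"brand": "iPhone", "storage": "256GB"}], "chosen": 1},
    {"shown": [{"brand": "Samsung", "storage": "256GB"},
               {"brand": "iPhone", "storage": "128GB"}], "chosen": 0},
    {"shown": [{"brand": "iPhone", "storage": "128GB"},
               {"brand": "iPhone", "storage": "256GB"}], "chosen": 1},
]


def option_pick_rates(rounds):
    """Share of appearances in which each (attribute, option) was in the chosen profile."""
    shown = defaultdict(int)
    chosen = defaultdict(int)
    for r in rounds:
        for index, profile in enumerate(r["shown"]):
            for attribute, option in profile.items():
                shown[(attribute, option)] += 1
                if index == r["chosen"]:
                    chosen[(attribute, option)] += 1
    return {key: chosen[key] / shown[key] for key in shown}


def attribute_importance(rates):
    """Spread of pick rates within each attribute, normalized to sum to 1."""
    by_attribute = defaultdict(list)
    for (attribute, _option), rate in rates.items():
        by_attribute[attribute].append(rate)
    spread = {a: max(v) - min(v) for a, v in by_attribute.items()}
    total = sum(spread.values())
    return {a: (s / total if total else 0.0) for a, s in spread.items()}


rates = option_pick_rates(rounds)
importance = attribute_importance(rates)
print(rates)
print(importance)
```

The pick rates answer the second question (which options within an attribute are preferred), while the normalized spread is a crude stand-in for the first (which attributes move decisions the most).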

Here’s a real example of conjoint analysis in action, showing how profiles are displayed during the survey:

It might sound complex, but conjoint analysis is surprisingly easy to set up!

Below you can see how I created the survey from the GIF above. As you can see, it’s just a set of attribute names along with the options for each attribute listed beneath it. This screenshot is from OpinionX, a survey platform that includes conjoint analysis on its free tier.


Most conjoint tools are surprisingly expensive, for example:

Conjointly = $2,000 per user per year

Sawtooth = $4,500 per user per year

Qualtrics = $20,000+ per year

OpinionX is the only survey platform offering full conjoint analysis capabilities completely free, including:

Unlimited surveys

Unlimited participants

All setup customizations

Here’s an example of Conjoint Analysis results taken from OpinionX:

Try it for yourself and create a conjoint analysis survey for free on OpinionX.

— — —

Method 2: MaxDiff Analysis

MaxDiff Analysis surveys show 3-6 options at a time and ask respondents to identify the “best” and “worst” in each set. By aggregating responses, MaxDiff ranks all options from highest to lowest preference. On a survey tool like OpinionX, you can change the “Best” and “Worst” labels to whatever you’d like, such as Most/Least Liked, Top/Bottom Priority, Love/Hate, etc.

MaxDiff is great for ranking long lists of options by breaking them down into more manageable chunks. It’s often used in product management to involve customers in voting when trying to prioritize features or problem statements.
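One simple way to aggregate best/worst picks is a counting score: the number of times an option was picked as best, minus the number of times it was picked as worst, divided by the number of times it was shown. The sketch below assumes that scoring rule and uses made-up feature names and responses. It is not necessarily how OpinionX or any other tool computes its rankings.

```python
from collections import Counter

# Hypothetical responses: the options shown in each set, plus the
# respondent's "best" and "worst" picks from that set.
responses = [
    {"shown": ["Dark mode", "Offline sync", "CSV export"],
     "best": "Offline sync", "worst": "CSV export"},
    {"shown": ["Dark mode", "Offline sync", "SSO login"],
     "best": "Offline sync", "worst": "Dark mode"},
    {"shown": ["Dark mode", "CSV export", "SSO login"],
     "best": "SSO login", "worst": "CSV export"},
    {"shown": ["Offline sync", "CSV export", "SSO login"],
     "best": "Offline sync", "worst": "CSV export"},
]


def maxdiff_scores(responses):
    """Counting score per option: (best picks - worst picks) / times shown."""
    shown, best, worst = Counter(), Counter(), Counter()
    for r in responses:
        shown.update(r["shown"])
        best[r["best"]] += 1
        worst[r["worst"]] += 1
    return {option: (best[option] - worst[option]) / shown[option]
            for option in shown}


scores = maxdiff_scores(responses)
# Highest score first; scores range from -1 (always worst) to 1 (always best).
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking)
```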

Here’s an example of the results from a MaxDiff survey on OpinionX:

While MaxDiff surveys are quite straightforward, the method has a reputation as an advanced research technique and is often priced accordingly.

OpinionX is the only survey tool that offers MaxDiff surveys for free with unlimited participants per survey.

Other than OpinionX, the next cheapest MaxDiff survey tools cost $4,000-$5,000 per year.

— — —

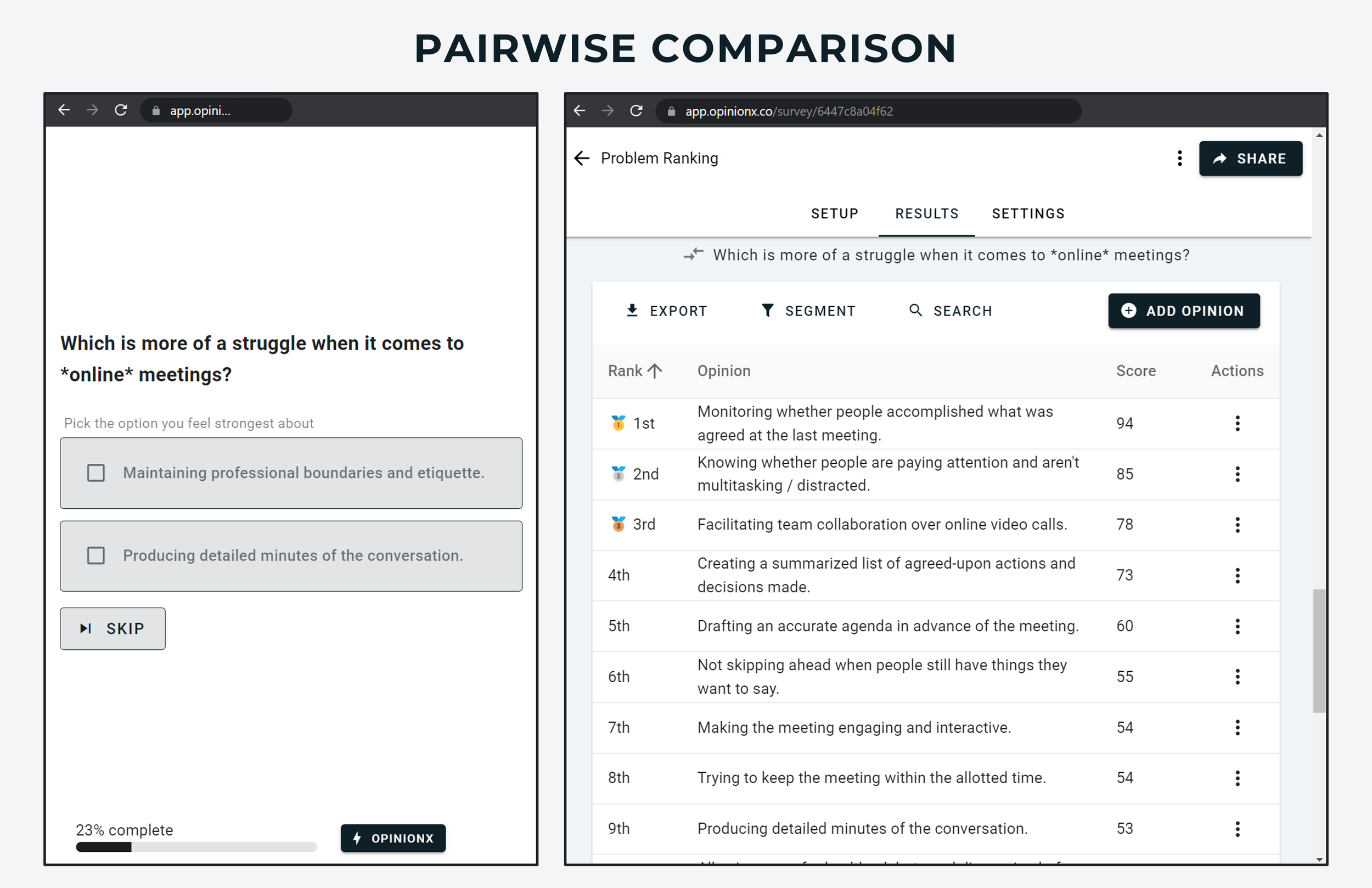

Method 3: Pairwise Comparison

Pairwise Comparison is a simple discrete choice method where participants compare two options at a time and choose their preferred one.

This binary format is fast and scalable, even for long lists of options. It's an ideal method when drag-and-drop voting or rating scale questions would be too long or overwhelming.

Results are analyzed using a win rate (ie. the percentage of pairings each option won). You can create Pairwise Comparison surveys for free on OpinionX, which offers unlimited surveys and unlimited participants per survey on its free tier. Here’s an example of the results for a Pairwise Comparison survey on OpinionX:
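As a rough illustration of the win-rate calculation described above (not OpinionX output or its implementation), the sketch below tallies a handful of invented votes. The problem statements and the `win_rates` helper are hypothetical.

```python
from collections import Counter

# Hypothetical votes: (option_a, option_b, winner) for each head-to-head pairing.
votes = [
    ("Slow onboarding", "Confusing pricing", "Slow onboarding"),
    ("Slow onboarding", "Missing integrations", "Missing integrations"),
    ("Confusing pricing", "Missing integrations", "Missing integrations"),
    ("Slow onboarding", "Confusing pricing", "Slow onboarding"),
    ("Confusing pricing", "Missing integrations", "Confusing pricing"),
    ("Slow onboarding", "Missing integrations", "Slow onboarding"),
]


def win_rates(votes):
    """Fraction of pairings each option won, out of the pairings it appeared in."""
    appearances, wins = Counter(), Counter()
    for option_a, option_b, winner in votes:
        appearances[option_a] += 1
        appearances[option_b] += 1
        wins[winner] += 1
    return {option: wins[option] / appearances[option] for option in appearances}


rates = win_rates(votes)
for option, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{option}: {rate:.0%}")
```

Dividing by appearances rather than by total votes matters when options are not shown equally often, which is common once a list is too long for every pair to be covered.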

— — —

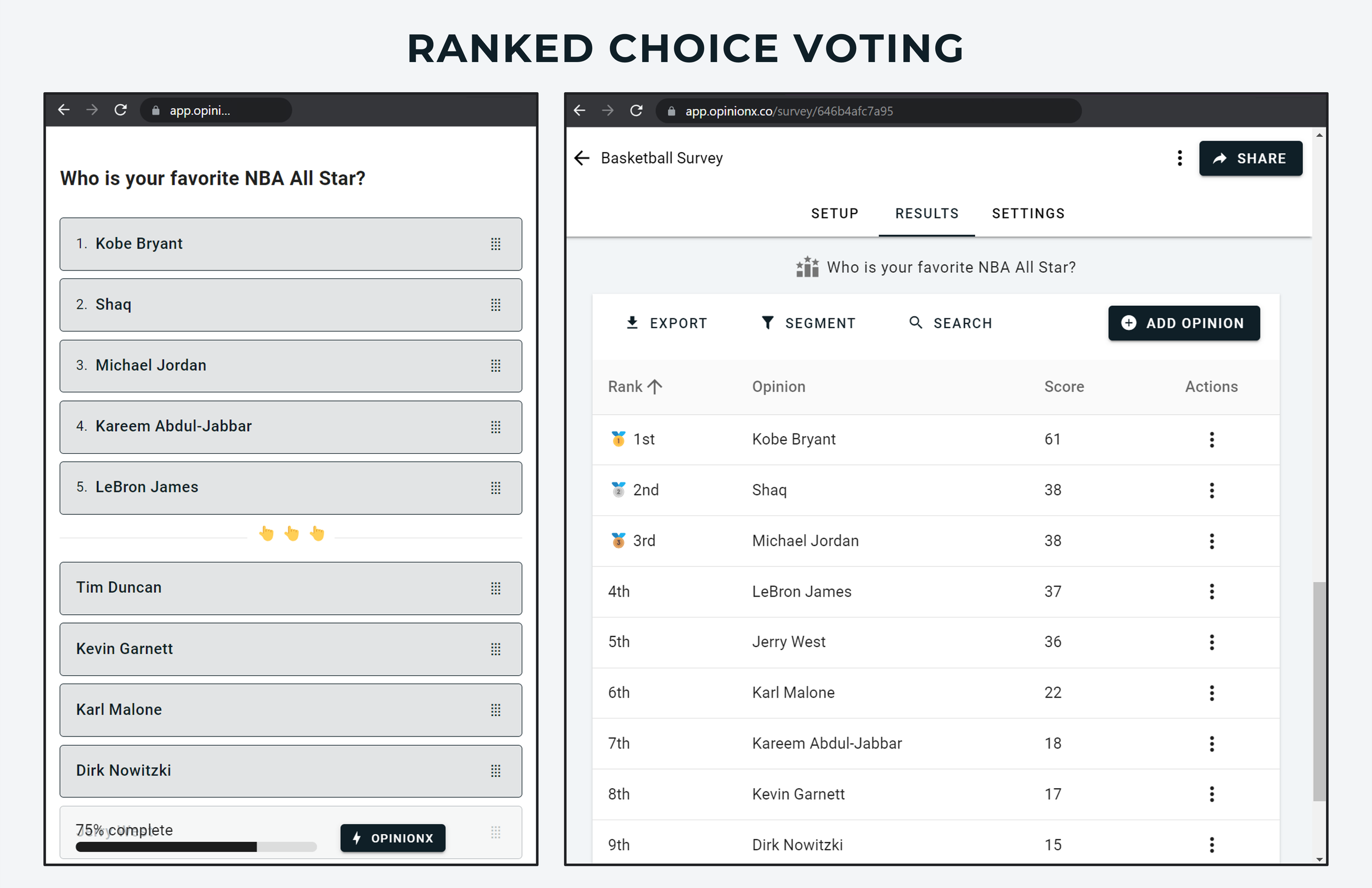

Method 4: Ranked Choice Voting

The best-known survey format for ranking people’s preferences, Ranked Choice Voting asks participants to rank the full list of options by personal preference:

You should only use Ranked Choice Voting if your list contains no more than 10 options (ideally just 3-6 in total). Longer lists are difficult to rank in one go, especially on phones, and are more likely to cause junk voting.
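To turn individual rankings into a collective order, one straightforward option is to average the position each option received. The sketch below assumes that approach, with invented ballots in which every respondent ranks every option. Other aggregation rules exist, and this is not a description of how any specific tool scores its ranking questions.

```python
# Hypothetical ballots: each list is one respondent's full ranking, best first.
ballots = [
    ["Speed", "Price", "Support"],
    ["Price", "Speed", "Support"],
    ["Speed", "Support", "Price"],
]


def average_positions(ballots):
    """Mean rank position per option (1 = top); assumes every ballot ranks all options."""
    totals = {}
    for ballot in ballots:
        for position, option in enumerate(ballot, start=1):
            totals[option] = totals.get(option, 0) + position
    return {option: total / len(ballots) for option, total in totals.items()}


averages = average_positions(ballots)
# A lower average position means a higher collective priority.
collective_order = sorted(averages, key=averages.get)
print(collective_order)
```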

Ranked Choice Voting is a free question type available on OpinionX surveys under the name “Order Rank”. Here’s a screenshot example of drag-and-drop ranking and the results page on a free OpinionX ranking survey:

— — —

Method 5: Points Allocation

Points Allocation surveys give each participant a pool of points and ask them to freely allocate them across the list of options in whatever way they want. It's particularly useful in budget allocation studies or when exploring trade-offs between priorities.

Unlike Ranked Choice Voting, which focuses on the order of people’s preferences, Points Allocation measures the intensity of people’s preferences.

For example, rather than just ranking ice cream flavors like Chocolate 1st, Vanilla 2nd, and Strawberry 3rd, I could allocate 9 of my 10 points to Chocolate, 1 point to Vanilla, and 0 points to Strawberry. This distribution of points tells you a lot more about how important each option is to me, not just the order of my preferences.
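A minimal sketch of how point allocations could be combined across respondents: sum the points each option received and divide by the total points handed out. The first allocation is the ice cream example above; the other two respondents and the `point_shares` helper are made up for illustration.

```python
# Each dict is one respondent's allocation of a 10-point budget.
# The first matches the example in the text; the rest are hypothetical.
allocations = [
    {"Chocolate": 9, "Vanilla": 1, "Strawberry": 0},
    {"Chocolate": 4, "Vanilla": 4, "Strawberry": 2},
    {"Chocolate": 5, "Vanilla": 1, "Strawberry": 4},
]


def point_shares(allocations, budget=10):
    """Share of all allocated points that each option received."""
    totals = {}
    for allocation in allocations:
        if sum(allocation.values()) != budget:
            raise ValueError("each respondent must allocate the full budget")
        for option, points in allocation.items():
            totals[option] = totals.get(option, 0) + points
    total_points = budget * len(allocations)
    return {option: points / total_points for option, points in totals.items()}


shares = point_shares(allocations)
print(shares)
```

In this invented data Vanilla and Strawberry end up with the same overall share even though individual respondents ordered them differently, which is the kind of intensity information a pure ranking would hide.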

“Points Rank” is a free question type available on OpinionX, where you can set a custom number of points and include as many options in your voting list as you’d like.

— — —

Which Discrete Choice Experiment should I choose for my ranking survey?

The five Discrete Choice Experiments covered in this blog post fall into three categories:

Full List Ranking, where you are given the entire list to rank at once, such as Ranked Choice Voting and Points Allocation.

Sampling Exercises, where you’re asked to vote on a series of option sets that get added together to form the overall result, such as MaxDiff Analysis and Pairwise Comparison.

Multi-Variable Scenarios, where you’re trying to rank a list of things that each have a range of options, which is only possible via Conjoint Analysis.

Full List Ranking

If you only have 3-6 options to rank, then Points Allocation or Ranked Choice Voting are easiest. Points Allocation is better for surfacing the magnitude of people’s preferences, not just the order of preference like in Ranked Choice Voting.

Sampling Exercises

To rank a longer list of options (10+), MaxDiff and Pairwise Comparison are the best choices, and the two are largely interchangeable. If your options are quite complex, I’d recommend using Pairwise Comparison to keep things lighter for the respondent. If you need to gather a lot of data from a smaller pool of people, MaxDiff Analysis is a better choice as it collects data faster by showing 3-6 options at a time instead of just 2.

Multi-Variable Scenarios

When you’re trying to rank a list of categories as well as a range of options within each category, then Conjoint Analysis is the only way to go. An example of this is the pricing plans for survey tools — each pricing plan offers the same list of features but with different limits/allowances. For example, you could have limits like surveys per month (1, 10, unlimited), number of researcher seats (1, 3, 5, unlimited), and participants per survey (100, 500, unlimited). Conjoint is the only way you can rank these categories and options in a survey.

If you’re still not sure which option to use but you have a survey already in mind, book a demo and we’ll help you get your first discrete-choice survey set up on OpinionX.

— — —

Examples of Discrete Choice Experiments being used

i. Customer Problem Stack Ranking

The reason I’m writing this post in the first place is to spread the word about discrete-choice research methods after seeing first-hand the value they offer.

Back in early 2021, six months after launching OpinionX, we lost our only paying customer. We had interviewed 150+ people trying to figure out what problem our startup should solve but clearly weren’t making progress. We decided to run a discrete choice experiment: we wrote a list of 45 problem statements and sent them as a pairwise comparison survey to 500 target customers that we found in Slack communities.

Within 2 hours, we could see that the problem statement we had built our whole website and product around was ranked dead last as the least important problem! But, luckily, 5 of the top 7 problems were actually things that our MVP could do, so we quickly pivoted everything to focus on these high-priority pain points instead. Within a week of this experiment, we had our first 5 paying customers. Check out the full story behind this discrete choice experiment in this video:

ii. Psychographic Customer Segmentation

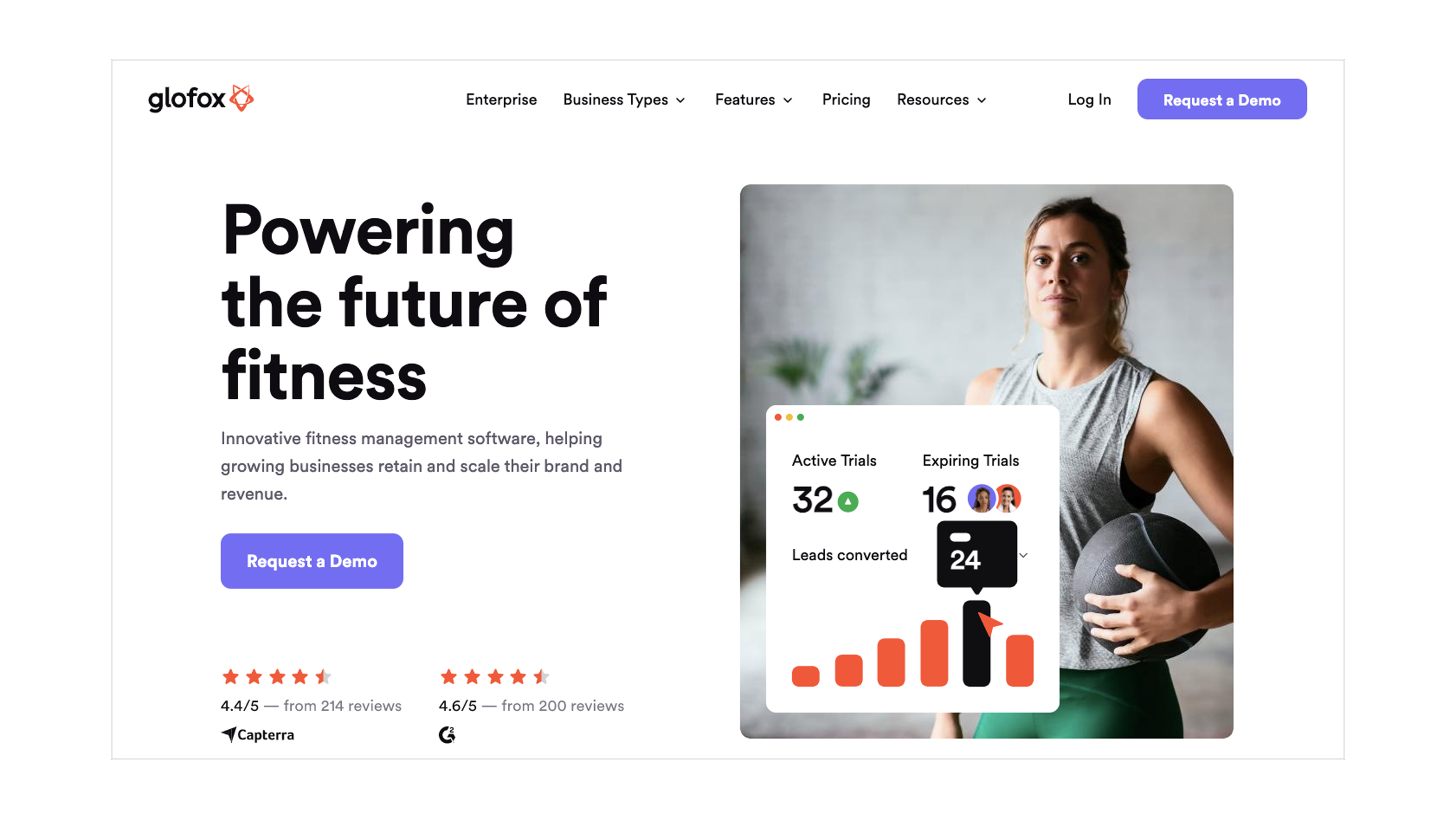

Thousands of gyms around the world, from small family studios to national franchises, use Glofox to schedule their classes, manage memberships, track attendance rates, automate payments, and more.

Francisco Ribeiro, a Product Manager at Glofox, was working on a new feature for Glofox during the summer of 2021 and had already conducted a bunch of user interviews to understand the customer need that this new feature would address. But there was a problem: Francisco couldn’t spot a clear pattern in the needs that customers were talking about during these interviews.

In one interview, a customer would complain about not being able to track engagement with their members and then the next interviewee would say that they have no problem tracking engagement, but that their main challenge was knowing whether members were churning or not.

When we first talked to Francisco, he was taking a step back and had recognized that he was dealing with some frustrating inconsistencies. He decided to run a discrete choice survey on OpinionX to measure which needs his customers felt strongest about. By the end of that same week, Francisco had figured out the cause of his confusion — the size of the customer’s business determined the highest-ranked problem!

Using OpinionX to get customers to vote on their needs via pairwise comparison, Francisco split participants into different groups based on the size of their gym operations to see the ranked results separately for each customer segment. He then calculated Glofox’s bottom-line financial impact for each segment’s highest-ranked problem to understand which one to focus on solving first. With this information on hand, he was able to inform his roadmap prioritization with real data and could easily explain his rationale to the rest of his team.
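The segment-by-segment comparison described above can be sketched by tagging each pairwise vote with the respondent’s segment and computing win rates separately per group. Everything in this example is invented for illustration (the segment labels, the need statements, and the votes); it is not Glofox data or OpinionX’s implementation.

```python
from collections import Counter, defaultdict

# Hypothetical votes: (segment, option_a, option_b, winner).
votes = [
    ("small gym", "Track engagement", "Spot churn", "Track engagement"),
    ("small gym", "Track engagement", "Spot churn", "Track engagement"),
    ("small gym", "Spot churn", "Track engagement", "Spot churn"),
    ("large gym", "Track engagement", "Spot churn", "Spot churn"),
    ("large gym", "Spot churn", "Track engagement", "Spot churn"),
]


def win_rates_by_segment(votes):
    """Win rate per option, computed separately within each segment."""
    appearances = defaultdict(Counter)
    wins = defaultdict(Counter)
    for segment, option_a, option_b, winner in votes:
        appearances[segment].update([option_a, option_b])
        wins[segment][winner] += 1
    return {
        segment: {option: wins[segment][option] / shown
                  for option, shown in counts.items()}
        for segment, counts in appearances.items()
    }


segment_rates = win_rates_by_segment(votes)
# Highest-ranked need within each segment.
top_need = {segment: max(rates, key=rates.get)
            for segment, rates in segment_rates.items()}
print(top_need)
```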

— — —

Getting Started with Discrete Choice Experiments

All five of the survey methods covered in this blog post are available on OpinionX for free, along with unlimited surveys, unlimited collaborators, and unlimited participants per survey — you only pay for OpinionX if you want advanced analysis features like segmentation filtering.

OpinionX is a free research platform for running discrete choice surveys. It comes with purpose-built analysis that lets you filter your results and compare how people’s preferences change by customer segment. Thousands of teams, including teams at companies like Google, Disney, and LinkedIn, use OpinionX to better understand people’s priorities.

If you enjoyed this guide, subscribe to our newsletter Full Stack Researcher for actionable user research advice that we share with thousands of product managers, user researchers, and startup founders: